checksum

point-and-click MD5, SHA1 and BLAKE2 hashing for Windows..

The world's fastest hashing application, just got faster!

Welcome to checksum, a blisteringly fast, no-nonsense file hashing application for Windows. It generates and verifies BLAKE2, SHA1 and MD5 hashes (aka. "MD5 Sums", or "digital fingerprints") of a file, a folder, or recursively, even through an entire disk or volume, and does it extremely quickly, intelligently, and without fuss. Many people strongly believe it to be the best hashing utility on planet Earth.

Did I say fast? Not only mind-blowing hashing speeds (way faster than even the fastest SSD) but the quickest "get stuff done" time. With checksum you point and click and files, folders, even complete hard drives get hashed. Or verified. Simple. checksum just gets on with the job. Click-and-Go..

Available for 64 bit or 32 bit Windows (a basic Linux/UNIX/BSD version is also included).

Why?

In the decade before checksum, I must have installed and uninstalled dozens, perhaps hundreds of Windows MD5 hashing utilities, and overwhelmingly they leave me muttering "brain-dead POS!" under my breath, or words to that effect, or not under my breath. I always knew that data verification should be simple, even easy, but it invariably ended up a chore.

Either the brain-dead programs don't know how to recurse, or don't even pretend to, or they give the MD5 hash files daft, generic names, or they can't handle long file names, or foreign file names, or multiple files, or they run in MS DOS, or choke on UTF-8, or are painfully slow, or insist on presenting me with a complex interface, or don't have any decent hashing algorithms, or don't know how to synchronize new files with old, or have no shell integration or any combination of these things; and I would usually end up shouting "FFS! JUST DO IT!!!".

No more! Now I have checksum, and it suffers from none of these problems; as well as adding quite a few tricks of its own..

What is it for, exactly?

Peace of mind! BLAKE2, SHA1 and MD5 hashes are used to verify that a file or group of files has not changed. Simple as that. This is useful, even crucial, in all kinds of situations where data integrity is important.

For instance, these days, it's not uncommon to find MD5 hashes (and less rarely now, SHA1 hashes) published alongside downloads, even Windows downloads. This hash, when used, ensures that the file you downloaded is exactly the same file the author uploaded, and hasn't been tampered with in any way, Trojan added, etc.; even the slightest change in the data produces a wildly different hash.
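For the curious, here is roughly what such a check boils down to. This is a minimal Python sketch, not checksum's own code; `hash_file` and `matches_published` are hypothetical helper names made up for illustration.

```python
import hashlib

def hash_file(path, algorithm="md5", chunk_size=1024 * 1024):
    """Return the hex digest of a file, reading it in chunks to save memory."""
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()

def matches_published(path, published_hash, algorithm="md5"):
    """Compare a file's digest with a published one, ignoring case and whitespace."""
    return hash_file(path, algorithm) == published_hash.strip().lower()
```

Calling `matches_published("download.zip", "<hash from the web page>")` returns True only when every byte of the download is as the author published it.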

A file hash is also the best way to ensure your 3D Printed propeller blade hasn't been "redesigned" to self-destruct!

It's also useful if you want to compare files and folders/directories; using checksums is far more accurate than simply comparing file sizes, dates or any other property. For quick file compare tasks, there's also checksum's little brother, simple checksum; simply drag & drop two files for an instant hash-accurate comparison.

If you burn a lot of data to CD or DVD, you can use checksum to accurately verify the integrity of your data right after a burn, and at any time in the future. If you distribute data in any way, maybe torrenteering your favourite things, run a file server of some kind, or just email a few files to your friends; hashes enable the person at the other end to be absolutely sure that the file arrived perfectly, 100% intact.

As well as providing secure verification against tampering, virus infection, file (and backup file) corruption, transfer errors and more, digital fingerprints can serve as an "early warning" of possible media failures, be they optical or magnetic. It was a hash failure that recently alerted me to a failing batch of DVD-R disks; I saved my fading data in time, and got a refund on the disks. I'll leave you to consider the million other uses. There's only one reason, though; peace of mind.

Absolutely no-nonsense file verification..

checksum can create (two clicks, or a drag-and-drop) or verify (one click) hashes of a file, a folder, even a whole disk full of files and folders in one simple, no-nonsense, high-performance operation. Basically, you point it at a file or folder and go! The parameters are controlled by command-line switches, but most folk won't have to worry about that; it all happens invisibly, and is built-in to your Windows® Explorer context (aka "concept", aka "right-click") commands (see above).

Note: while checksum operates with command-line switches, it is NOT a Windows® console application; there's no messy DOS box, or anything like that. But if you want to run it from a console, that's covered, too.

There are a wealth of command-line options, but most people find that checksum just works exactly as they would expect, without any messing about; right-click and go! But, if you are the sort who likes to customize and hack at things, you will find plenty to keep you occupied!

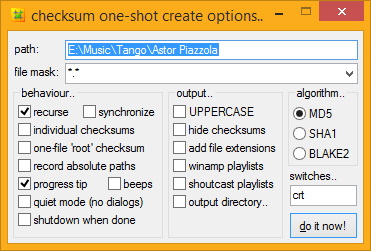

On-the-fly configuration..

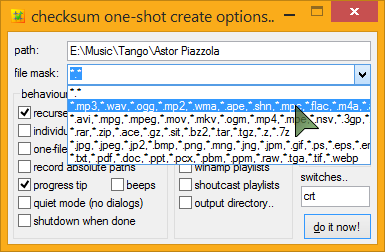

If you want to change any of checksum's options on-the-fly, simply hold down the SHIFT key when you select its Explorer context menu item, and checksum will pop up a dialog for you to tweak the process. If you want to have anything permanently set, checksum comes with a standard plain text Windows ini file for you to tweak to your heart's content. Anyone smart enough to use MD5sums can edit plain text.

The options dialog is most useful when you want to only hash certain files in a folder, like mp3's, or movies. With your file mask groups, you can configure file-type specific hashing with just a couple of clicks.

Common music, video, and archive formats come setup and ready to go, and you can easily edit or add to these at any time.

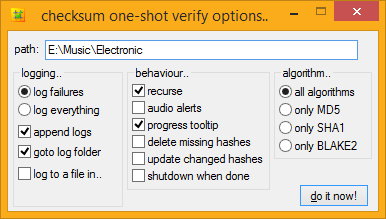

You pop up the options by holding down the SHIFT key when you select the explorer menu item, so it's easy to get to the advanced options whenever you need them. Same goes for verification, though generally you won't need it - checksum is smart enough to just get on with the job, verifying whatever checksum files it finds in the path, be they MD5, SHA1 or BLAKE2, or all of the above, and you'll probably never need to use anything but the default verify command, no matter how advanced you are! And because checksum recognizes other formats of MD5 and SHA1 files (there is no standard BLAKE2 format), it can be used not only to verify and create new checksums, but also verify existing checksum files, even ancient ones, automatically.

I expect there is some weird MD5 file format out there that I don't have an example of, Wang, maybe? but in practice, checksum supports ALL known MD5 verification file formats, that is, known by me. If you find an MD5 file format that checksum doesn't support, send me that file!!

There isn't really a standard SHA1 format yet, but checksum's is pretty good (it's the same as the output from a *NIX sha1sum command in binary mode). Shall we?

100% Portable..

checksum usually operates as a regular installed desktop application with Explorer context menus, custom .hash, .md5, .sha1 and .blake2 desktop icons, Windows start menu entries, and so on; but checksum can also operate in a completely portable state, and happily works from a pen-drive, DVD, or wherever you happen to be; no less than total portability.

Even with its little brother, simple checksum tagging along, the whole lot fits easily on the smallest pen-drive (the 32 bit version will UPX onto a floppy disk!), enabling you to create BLAKE2, SHA1 and MD5 hashes, wherever you are. To activate portable mode, simply drop a checksum.ini file next to checksum.exe (or run one-time with the "portable" switch), you're done.

It's no problem to run checksum both ways simultaneously, or to run checksum in portable mode on a desktop where checksum is already installed. Simply put, if there's a checksum.ini next to it, checksum will use it, and if there isn't an ini there, checksum uses the one in your user data folder (aka. "Application Data", aka. "AppData").
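The lookup rule is simple enough to sketch in a few lines of Python. This is only an illustration of the rule as described; `find_ini` is a hypothetical name, and the exact location of the ini inside the user data folder is an assumption.

```python
from pathlib import Path

def find_ini(exe_dir, user_data_dir):
    """Pick the ini file: one beside the program wins, else the per-user copy."""
    portable_ini = Path(exe_dir) / "checksum.ini"
    if portable_ini.is_file():
        return portable_ini  # portable mode
    # Assumption: the per-user ini sits directly inside the given data folder.
    return Path(user_data_dir) / "checksum.ini"
```

Because the decision is made per launch, an installed copy and a pen-drive copy can happily coexist on the same machine.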

If you like applications to run in a portable state, even on your own desktop, no problem; you can skip the installer altogether and simply copy the files (checksum.exe and simple checksum.exe) to wherever you like. They are in the installer's files/ directory inside the main zip archive. There's also a checksum.ini inside the archive, so you can unzip-and-go.

Note: Regardless of whether you install checksum or run it in a portable state, its functionality is identical.

Introducing.. The Unified Hash Extension™

And Multi-Hashing™..

checksum uses the MD5, SHA1 and BLAKE2 hashing algorithms, and can create .md5 and .sha1 and .blake2 (or .b2 or whatever you use) files to contain these hashes. But checksum prefers to instead create a single .hash extension for all your hash files, whatever algorithm you use. Welcome to the unified .hash extension..

I feel there are quite enough file extensions to deal with, and with some effort on the part of software developers, this may catch on. I hope it does, anyway, and that you agree. A single, unified hash extension looks like the way forward, to me. All comments welcome, below.

As well as being able to verify MD5, SHA1 and BLAKE2 hashes, even mixed up in the same file, checksum can also create such a file, if you so desire. At any rate, if you start using BLAKE2 or SHA1 hashes some day, you can keep your old MD5 hashes handy, inside your .hash files..

The single, unified hash extension gives us not only the freedom to effortlessly upgrade algorithms at any time, without having to handle yet-another-file-type, but also the ability to easily store output from multiple hashing algorithms inside a single .hash file. Welcome to multi-hashing, which may well have security benefits, to boot (to fool a multi-hash, a tampered file would have to collide under every algorithm at once).
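To make the idea concrete, here is a small Python sketch that builds multi-hash entries for one file. The line layout (`#algorithm#name#timestamp` followed by `digest *name`) is copied from the sample .hash contents shown elsewhere on this page; the function name and the choice of algorithms are illustrative assumptions, not checksum's internals.

```python
import hashlib
from datetime import datetime

def multi_hash_entries(name, data, modified, algorithms=("md5", "sha1")):
    """Build .hash-style lines for one file under several algorithms."""
    stamp = modified.strftime("%Y.%m.%d@%H.%M:%S")
    lines = []
    for algorithm in algorithms:
        digest = hashlib.new(algorithm, data).hexdigest()
        lines.append(f"#{algorithm}#{name}#{stamp}")  # info line
        lines.append(f"{digest} *{name}")             # hash line
    return lines
```

Each algorithm simply contributes its own pair of lines, so old MD5 entries can sit next to newer ones in the same file.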

Lightning fast..

If you do a lot of hashing, you will know that it's an intensive process, and relatively slow. Well, checksum is fast, lightning fast.

Even on my old desktop (a lowly 1.3GHz, where checksum was initially developed) it would rip through a 100MB file in under one second. The latest checksum can crunch data faster than any hard drive or even SSD can supply it. Hashing your average album or TV episode is instantaneous.

With right-click convenience, intelligent recursion and synchronization, full automation, and crazy-fast hashing speeds, digital fingerprinting is no longer a chore, it's a joy!

Okay, I'm getting carried away, but seriously, this is how hashing was always meant to be.

Features..

If you like lists, and who doesn't, here's a list of checksum's "features", as compared to your average md5 utility..

True point-and-click hash creation and verification..

No-brainer hash creation and verification. In a word; simple.

Choice of MD5, SHA1 or BLAKE2 hashing algorithms..

Create a regular MD5sum (128-bit), or further increase security by using the SHA1 algorithm (160-bit). For the ultimate in security, you can create BLAKE2 hashes (technically, BLAKE2s-256, which kicks the SHA family's butt in both security AND hashing speed). checksum recognizes and works with all these formats, even mixed up in the same file.

hash single files, or folders/directories full of files.. no problem..

checksum can create hash files for individual files or folders full of files, and importantly, automatically recognizes both kinds during verification, verifying every kind of checksum file it can find. Also, when creating individual hash files, checksum is smart enough to skip any that already exist.

Effortless recursion (point at a folder/directory or volume and GO!) ..

Not only fully automatic creation and verification of files, and folders full of files, but hash all the files and folders inside, and all the folders inside them, and so on, and so on, through an entire volume, if you desire.. one click! ... Drive hashing is now officially EASY!

LONG PATH support..

All checksum's internal file operations use UNC-style long paths, so can easily create and verify hashes for files with paths of up to 32,767 characters in length. Goodbye MAX_PATH!
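On Windows, paths beyond MAX_PATH are normally reached by adding the extended-length prefix (`\\?\`, or `\\?\UNC\` for network shares). The Python sketch below shows that conversion; it assumes this is the kind of path meant above, and `to_extended_path` is a hypothetical helper, not part of checksum.

```python
import ntpath

def to_extended_path(path):
    """Add the Windows extended-length prefix to an absolute path."""
    if path.startswith("\\\\?\\"):
        return path  # already prefixed
    path = ntpath.normpath(path)  # the prefix disables normalisation, so do it first
    if path.startswith("\\\\"):
        # Network share: \\server\share\x becomes \\?\UNC\server\share\x
        return "\\\\?\\UNC\\" + path[2:]
    return "\\\\?\\" + path
```

The sketch expects an absolute path; relative paths would need to be made absolute before prefixing.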

Full UNICODE file name support..

checksum can work with file names in ANY language, even the tricky ones like Russian, Arabic, Greek, Japanese, Belarusian and Urdu. checksum can also handle those special characters and symbols that lurk inside many fonts. In short, if you can use it as a Windows file or folder name, checksum can hash it!

"root", folder or individual file hashes, your call..

Some people prefer hashes of folders, some prefer "root" hashes (with an entire volume's hashes in a single file). Some people like individual hashes of every single file. I like all three, depending on the situation, and checksum has always been able to do it all.

Email notifications..

checksum can mail you when it detects errors in your files; especially handy for scheduled tasks running while you are away or otherwise engaged. checksum's Mail-On-Fail can do CC, BCC, SSL, single and multiple file attachments (including attaching your generated log file), mail priority and more.

Multiple user-defined file mask groups..

For instance, hash only audio files, or only movies, whatever you like, available from a handy drop-down menu. All your favourite file types can be stored in custom groups for easy-peasy file-type-specific hashing.

The most common groups are already provided, and it's trivial to create your own. You can also enter custom masks directly into the one-shot options, e.g. report*.pdf, to hash all the reports in a folder, create ad-hoc groups, or whatever.
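If you want a feel for how mask matching behaves, this Python sketch filters a list of names against a group of masks. The group names and contents here are made up for illustration (the real groups live in your preferences), and `select_files` is a hypothetical helper.

```python
from fnmatch import fnmatch

# Hypothetical groups, for illustration only.
MASK_GROUPS = {
    "music": ["*.mp3", "*.ogg", "*.wma"],
    "reports": ["report*.pdf"],
}

def select_files(names, masks):
    """Keep names matching at least one mask (case-insensitive, as on Windows)."""
    return [name for name in names
            if any(fnmatch(name.lower(), mask.lower()) for mask in masks)]
```

A one-off mask such as `report*.pdf` is just a group with a single entry.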

Automatic music playlist creation..

Another killer feature; checksum can create music playlist files along with your checksums! When creating a folder hash, if checksum encounters any of the music files you have specified in your preferences; mp3's, ogg files, wma, whatever; it can create a playlist for the collection (i.e.. the album). Rather nifty, and a perfect addition to the custom command in the tips and tricks section.

As well as regular Windows standard .m3u/m3u8 playlist files (Winamp, etc.), checksum also supports .pls (shoutcast/icecast) playlists.

Effortlessly handles all known legacy md5 files..

If you discover an MD5sum that checksum doesn't support, Send Me That FILE!

Create lowercase or UPPERCASE checksums at will..

Like many things, this can also be set permanently, if you so wish.

Automatic synchronization of old and new files..

Automatically add new hashes to existing checksum files.

That's right! Automatically add new hashes to existing checksum files!

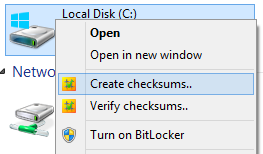

Integrated Windows® Explorer context (right-click) operation..

The installer will set up Windows® Explorer context commands for all files and folders, so you can right-click anything and create or verify checksums at will. Very handy. "setup", the rather clever installer, is also available in its own right, as a free, and 100% ini-driven installer engine for your own goodies. Stuffed with features, easy to use, and definitely deserving a page to itself. Soon.

As explained above, you can also bypass the installer altogether, and simply unzip-and-go, for 100% portable checksumming. Or you can have both.

Scheduler Wizard..

One of checksum's special startup tasks is a Scheduler Wizard, which will guide you simply through the process of creating a checksum scheduled command in Windows Task Scheduler.

Click a few buttons, set your preferences in the familiar one-shot options dialog, and go!

No-fuss intelligent checksum verification..

Cut and paste your own checksum files if you like, rename them, mix and match legacy MD5 formats in a single file, even throw in a few SHA1 or BLAKE2 hashes just for fun; worry not; checksum will work it out!

Permanently ignore any file types..

Obviously we don't want checksum files of checksum files, for starters, but if you have other file types you'd like on a permanent ignore, desktop.ini files, thumbs.db, whatever; it's easy to set up. The most common annoying file types already are.

Ignored folders..

As well as a set of permanently ignored folders (like "System Volume Information", $RECYCLER, and so on) you can set custom ignore masks on a per-job basis, using standard Windows file masks, e.g. "foo*" or "?bar".

Real-time tool-tip style dynamic progress update..

Drag it around the screen - it snaps to the edges, and stays there (checksum also remembers its dialog screen positions, for intuitive, fast operation).

Tool-tip progress can be disabled altogether, if you wish.

Right-click the Tooltip for extra options.

During verification, any failures can be seen real-time in a system tray tool-tip, hover your mouse over the tray icon for details. checksum also flashes the progress tooltip red momentarily, and (optionally) beeps your PC speaker, to let you know of any hash failures. If there were errors, the final tooltip is red (by default). Anything to make life a bit easier.

Verify a mix of multiple (and nested) MD5, SHA1 and BLAKE2 checksum files with a single command..

Does what it says on the can!

Extensionless checksum files..

Traditionally, individual checksum files are named filename.ext.md5. Personally, I find this inelegant, and prefer them to be named filename.md5. I like it so much, I made it the default, but you can change that, if you like. When running extensionless; if checksum encounters multiple files with same name, it simply adds them to the same checksum file, so checksums for foo.txt, foo.htm, and foo.jpg would all go inside foo.md5, or better yet, foo.hash. Highly groovy.
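The grouping rule can be sketched in Python like so. This only illustrates which files would end up sharing a checksum file under extensionless naming; `group_by_hash_file` is a hypothetical name.

```python
from collections import defaultdict
from pathlib import PureWindowsPath

def group_by_hash_file(file_names, extension=".hash"):
    """Map each extensionless checksum file name to the files it would cover."""
    groups = defaultdict(list)
    for name in file_names:
        stem = PureWindowsPath(name).stem  # "foo.txt" -> "foo"
        groups[stem + extension].append(name)
    return dict(groups)
```

Feeding it foo.txt, foo.htm and foo.jpg gives a single foo.hash entry listing all three.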

On the verify side of things, checksum has always verified every possible checksum it can find, so these multi-hash files look just like regular folder hash files, and verify perfectly, so long as the data hasn't changed, of course!

Search & Verify Single Files..

With checksum, you can verify a single file, anywhere in your system, from anywhere in your system, regardless of where its associated .hash file is in the file tree, be it in a folder or root (aggregate) hash.

checksum will search up the tree, first looking for matching individual .hash files, and then folder hashes, all the way up to the root of the volume until it finds one containing a hash for your file, at which point it will verify that one hash and return the result. Another fantastic time-saver!

This works best as an explorer context menu command (supplied).
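The upward search can be pictured with this simplified Python sketch. It is an approximation under stated assumptions: it only understands `digest *name` lines, treats every .hash file in a folder alike (rather than trying individual hashes first), and assumes UTF-8 text; `read_entries` and `find_stored_hash` are hypothetical names.

```python
from pathlib import Path

def read_entries(hash_file):
    """Yield (digest, relative_name) pairs from 'digest *name' lines."""
    text = hash_file.read_text(encoding="utf-8", errors="replace")
    for line in text.splitlines():
        if line.startswith("#") or " *" not in line:
            continue  # skip comments and anything unrecognised
        digest, name = line.split(" *", 1)
        yield digest.strip(), name.strip()

def find_stored_hash(target):
    """Walk from the file's folder up to the volume root, looking for its hash."""
    target = Path(target).resolve()
    for folder in target.parents:  # nearest folder first, root last
        for hash_file in sorted(folder.glob("*.hash")):
            for digest, name in read_entries(hash_file):
                # Stored names are relative to the folder holding the hash file.
                candidate = folder / name.replace("\\", "/")
                if candidate.resolve() == target:
                    return hash_file, digest
    return None
```

The first match wins, so a hash stored close to the file is preferred over one in a root hash further up.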

Smart checksum file naming, with dynamic @tokens..

checksum file names reflect the actual files or folders checked! Automatically.

If you want more, you can specify either static or dynamic checksum file names, with a wide range of automagically transforming tokens. See below for details.

Report Changed/Corrupt/Missing States..

checksum can optionally store a file's modification date and time along with the checksums, like so..

#md5#info.nfo#2009.09.26@19.49:36

5deee1f6ac75961d2f5b3cfc01bdb39c *info.nfo

Thanks to the extra information, during verification checksum will report files with mismatched hashes as either "CHANGED" (they have been modified by some user/process) or "CORRUPT", where the modification time stamp is unchanged.

These will show as a different color in your HTML logs.
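In code terms, the decision looks something like the Python sketch below. The info-line layout is taken from the example above; the function names and the "OK" label are illustrative assumptions rather than checksum's internals.

```python
from datetime import datetime

def parse_info_line(line):
    """Split '#md5#info.nfo#2009.09.26@19.49:36' into (algorithm, name, modified)."""
    algorithm, rest = line.lstrip("#").split("#", 1)
    name, stamp = rest.rsplit("#", 1)  # the timestamp is always the last field
    return algorithm, name, datetime.strptime(stamp, "%Y.%m.%d@%H.%M:%S")

def classify(stored_digest, actual_digest, stored_modified, actual_modified):
    """Return 'OK', 'CHANGED' or 'CORRUPT' for one verified file."""
    if stored_digest.lower() == actual_digest.lower():
        return "OK"
    if stored_modified != actual_modified:
        return "CHANGED"  # timestamp moved, so something rewrote the file
    return "CORRUPT"      # data differs although the timestamp did not move
```

The stored stamp only has one-second resolution, so the file's actual modification time should be truncated to whole seconds before comparing.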

You can choose whether or not to report (and log) missing, changed, or corrupted files. For example, if you only want to know about CORRUPT files, but don't care about changed or missing files, you would set..

report_missing=false

report_changed=false

report_corrupt=true

As one commenter (below) pointed out, with this sort of functionality, checksum would become "the only tool against silent data corruption". I believe this goal has now been achieved.

The chosen algorithm is also stored along with this information, for possible future use (aye, more algorithms!).

Automatically remove hashes for missing files..

Stuff gets deleted, on purpose; fact of computing life. When verifying your hashes, you can have checksum remove those entries from your .hash file automatically, so you never have to think about them again!

The number of deleted hashes, if any, is posted in your final notification.

Automatically update hashes for changed files..

Files get mindfully altered; another fact of computing life - MP3's get new ID3 tags, documents get edited, and so on. Now you can have your hashes updated, too! That's right! During verification, you can instruct checksum to automatically update (aka. "refresh") those entries (and their associated timestamps) inside your .hash file. No more editing required!

The number of updated hashes, if any, is also posted in your final notification.
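Both clean-up jobs amount to reconciling the stored entries with what is actually on disk. Here is a simplified Python sketch of that bookkeeping; it refreshes any mismatched entry, whereas a real run would presumably only refresh files reported as changed. `refresh_entries` is a hypothetical name.

```python
def refresh_entries(stored, on_disk, remove_missing=True, update_changed=True):
    """Reconcile stored hashes with freshly computed ones.

    stored / on_disk: dicts of file name -> hex digest.
    Returns (entries, removed_count, updated_count).
    """
    entries, removed, updated = {}, 0, 0
    for name, digest in stored.items():
        if name not in on_disk:
            if remove_missing:
                removed += 1
                continue  # drop the entry for a deleted file
            entries[name] = digest
        elif on_disk[name] != digest and update_changed:
            entries[name] = on_disk[name]  # refresh the stale hash
            updated += 1
        else:
            entries[name] = digest
    return entries, removed, updated
```

The two counts returned are the kind of numbers that end up in the final notification.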

Effortless hashing of read-only volumes..

checksum can create BLAKE2, SHA1 and MD5 hashes for the read-only volume, but store the checksum files elsewhere; either with relative paths inside; so you can later copy the checksum file into other copies of the volume, or absolute paths; so you can keep tabs on the originals from anywhere.

checksum currently has three different read-only fall-back strategies to choose from; use whichever most suits your needs.

Extensive logging capabilities, with intelligent log handling and dynamic log naming..

checksum always gives you the option to log failures. But you can log everything if you prefer. hashing times can be included in the logs, and proper CSS classes ensure you can tell what's-what at a glance.

Relative or absolute log file path locations can be configured in your preferences, as can the checksum log name itself; with dynamic date and time, as well as dynamic location and status tokens, so you can customize the output naming format to your exact requirements.

In other words, as well as leaving it to checksum to work out automatically, or typing a regular name into your prefs, such as "checksum.log", you can use cool @tokens to insert the current..

@sec... seconds value. from 00 to 59

@min... minutes value. from 00 to 59

@hour... hours value, in 24-hour format. from 00 to 23

@mday... numeric day of month. from 01 to 31

@mon... numeric month. from 01 to 12

@year... four-digit year

@wday... numeric day of week. from 1 to 7 which corresponds to Sunday through Saturday.

@yday... numeric day of year. from 1 to 366 (or 365 if not a leap year)

There are also two special tokens:

@item, which is transformed into the name of the file or folder being checked, and @status, which automatically transforms into the current success/failure status.

You can mix these up with regular strings, like so..

log_name=[@year-@mon-@mday @ @hour.@min.@sec] checksums for @item [@status!].log

The @status strings can also be individually configured in your prefs, if you wish. Roll the whole thing up, and with the settings above, the final log name might look like..

[2007-11-11 @ 16.43.50] checksums for golden boy [100% AOK!].log
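Token expansion of this kind is easy to model. The Python sketch below reproduces the example above; it is an illustration only, `expand_tokens` is a hypothetical name, and leaving @wday and @yday unpadded is an assumption.

```python
import re
from datetime import datetime

def expand_tokens(template, when, item, status):
    """Replace @tokens in a log-name template with concrete values."""
    values = {
        "sec": f"{when.second:02d}",
        "min": f"{when.minute:02d}",
        "hour": f"{when.hour:02d}",
        "mday": f"{when.day:02d}",
        "mon": f"{when.month:02d}",
        "year": f"{when.year:04d}",
        "wday": str(when.isoweekday() % 7 + 1),  # 1 = Sunday ... 7 = Saturday
        "yday": str(when.timetuple().tm_yday),   # 1 to 366
        "item": item,
        "status": status,
    }
    pattern = re.compile("@(" + "|".join(values) + ")")
    return pattern.sub(lambda match: values[match.group(1)], template)
```

A lone "@" (as in "@mday @ @hour") matches no token name, so it passes through untouched.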

HTML logging with log append and auto log-rotation..

As well as good old plain text, checksum can output logs in lovely XHTML, with CSS used for all style and positional elements. With the ability to append new logs to old, and auto-transforming tokens, you can set up automatic daily/monthly/whatever log rotation by doing no more than choosing the correct name. You can even have your logs organized by section and date, all automatically; via the free-energy from your @tokens.

Click here to see a sample of checksum's log output, amongst other things.

Exit Command..

checksum can be instructed to run a program upon job completion. It can also pass its own exit code to the program.

Total cross-platform and legacy md5 file format support..

Work with hidden checksums..

If you don't like to see those .hash files, no problem; checksum can create and verify hidden checksum files as easily as visible ones. Like most options, as well as on-the-fly configuration via the options dialog (hold down SHIFT when you launch checksum), you can set this permanently by altering checksum.ini.

To create hidden checksums (same as attrib +h), use "h" on the command-line, or choose that option from the options dialog.

Don't worry about creating music playlists with the invisible option enabled, the playlists will be perfectly visible, only the checksums get hidden! (well, someone asked! ;o)

"Quiet" operation..

Handy if you are making scheduled items, etc, and want to disable all dialogs and notifications. Simply add a 'q' (or check the box in the one-shot options).

You can also set checksum to only pop up dialogs for "long operations". Just how long constitutes a long operation, is of course, up to you. The default is 0, so you get "SUCCESS!", even if it only took a millisecond. Check your preferences for many more wee tricks like this.

"No-Lock" file reading..

checksum doesn't care if a file is in use, it will hash it anyway! And it won't lock your files up while it's doing it. Feel free to point checksum at any folder.

Audio alerts..

Unrelated to the "quiet" option (above), checksum can thoughtfully invoke your PC speaker to notify you of any verification failures as they happen, as well as shorter double-pips on completion (if your PC supports this - many modern PCs don't). You can even specify the exact KHz value for the beeps, whatever suits you best.

You can also assign WAV files for the success and failure sounds, if you prefer. A few samples can be found here.

Drag-and-drop files, folders and drives onto checksum..

If you prefer to drag and drop things, you can keep checksum (or a shortcut to it) handy on your desktop/toolbars/SendTo menu, and drag files or folders onto it for instant checksum creation. This works for verification, too; if you drag a hash file onto checksum, its hashes are instantly verified.

Note: like regular menu activation, you can use the SHIFT key to pop-up the options dialog at launch-time. You can also drag and drop files and folders onto the one-shot options dialogs, to have their paths automatically inserted for you.

User preferences are stored in a plain text Windows® ini file..

You can look at it, edit it, back it up, script with it, and handle it. Lots of things can be tweaked and set from here, though 99.36% of people will probably find the defaults are just fine, and the one-shot option dialogs handle everything else they could ever need. But if you are a more advanced user, with special requirements, chances are checksum has a setting just for you. Click here to find out more about checksum.ini

Comprehensive set of command-line switches..

Normally with checksum, you simply click-and-go; but checksum also accepts a large number of command-line switches. If you are creating a custom front-end, modifying your explorer context menu commands, or creating a custom scheduled task or batch file, take a look at checksum's many switches. For lots more details, see here.

If you simply have some special task to perform, it can probably be achieved via the one-shot options dialog.

Shutdown when done..

If your system doesn't normally run 24/7, don't let that stop you from hashing Terabytes of data! checksum can be instructed to shut down your PC at the end of the job.

That's a lot of features! And it's not even them all!

checksum is jam-packed with thoughtful little touches, you might even call it Artificial Intelligence! Wherever possible, if checksum can anticipate and interpret users, it will.

Legacy and cross-platform MD5/SHA1 file formats that checksum can handle..

If you look inside any MD5/SHA1 checksum file - it's plain text - you find all sorts of things.

Here's what a regular (MD5) checksum file looks like..

01805fe7528f0d98c

Each line begins with the MD5/SHA1 digest (hash), followed by a space, then an asterisk, then the filename. It's a clear format, flexible, relatively fool-proof ("*" is not allowed on any file system), and well supported.

Other formats I've come across..

single file single MD5/SHA1 hash types (these necessarily have the same name as the file, with ".md5" or ".sha1" extension added, and are often hand-made by system admins, or else piped from a shell md5/sha command) ..

01805fe7528f0d98c

4988ae20125db8071

space delimited hashes (before we figured out the clever asterisk)..

01805fe7528f0d98c

4988ae20125db8071

double-space delimited hashes (just silly, really)..

Believe it or not, this is the de-facto standard for md5 files, mainly because it's the output from the UNIX md5sum/sha1sum command in 'text' mode, which amazingly; is the default setting. By the way; md5sum's "-b" or "--binary" switch overrides this insanity.

01805fe7528f0d98c

4988ae20125db8071

TAB delimited hashes (I am assured these do exist!)..

01805fe7528f0d98c

4988ae20125db8071

back-to-front hashes in parentheses - this is quite a common format around the UNIX/Solaris archives of the world (it's the output from the openssl dgst command) ..

MD5(01 - Stygian Vista (radio controlled).mp3)= 01805fe7528f0d98c

or..

MD5 (01 - Stygian Vista (radio controlled).mp3) = 01805fe7528f0d98c

even..

SHA1(01 - Stygian Vista (radio controlled).mp3)= 4988ae20125db8071

checksum supports verification of all these formats with ease, so feel free to point it at any old folder structure, Linux CD, whatever, or any .md5 or .sha1 files you have lying around, and get results.
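For anyone writing their own tools, most of the line formats above can be recognised with two regular expressions. This Python sketch is an assumption-laden illustration, not checksum's parser: it guesses the algorithm from digest length (32 hex digits for MD5, 40 for SHA1) and does not handle single-file hash files that contain only a digest.

```python
import re

# "digest *name", "digest name", "digest  name" and "digest<TAB>name"
PLAIN = re.compile(r"^(?P<digest>[0-9a-fA-F]+)(?: \*| {1,2}|\t)(?P<name>.+)$")
# "MD5(name)= digest", "MD5 (name) = digest", "SHA1(name)= digest"
TAGGED = re.compile(r"^(?P<algo>MD5|SHA1) ?\((?P<name>.+)\) ?= ?(?P<digest>[0-9a-fA-F]+)$")
ALGO_BY_LENGTH = {32: "md5", 40: "sha1"}  # hex digits per digest

def parse_line(line):
    """Return (algorithm, digest, file name) for a checksum line, or None."""
    line = line.rstrip("\r\n")
    match = TAGGED.match(line)
    if match:
        return match["algo"].lower(), match["digest"].lower(), match["name"]
    match = PLAIN.match(line)
    if match and len(match["digest"]) in ALGO_BY_LENGTH:
        digest = match["digest"].lower()
        return ALGO_BY_LENGTH[len(digest)], digest, match["name"]
    return None
```

The greedy name match in the tagged form is what lets file names containing their own parentheses survive intact.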

And in case the above track names got you googled here, yes, checksum also works great in Microsoft® Vista, and Windows 7, Windows 8, Windows 8.1, Windows 10 and Windows Server of course, even XP! ;o)

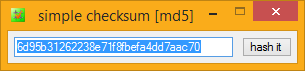

simple checksum

Supplied along with checksum is checksum's little brother app, "simple checksum", a supremely simple, handy, free, and highly cute drag-and-drop desktop checksumming tool utilizing checksum's ultra-fast hashing library; for all those "wee" hashing tasks..

Drop a file onto simple checksum, get an instant MD5, SHA1 or BLAKE2 hash readout.

Drop two files, and get an instant MD5, SHA1 or BLAKE2 file compare.

Drop two folders, and get a hash-perfect folder compare (using checksum as the back-end).

Drop a file onto simple checksum with a hash in your clipboard, get an instant clipboard hash compare.

And that works from your "SendTo" menu, too (select two files - SendTo simple checksum.. instant file compare; send two folders, get a hash-perfect folder compare), as well as drag and drop onto simple checksum itself, or a shortcut to simple checksum.
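A hash-based folder compare can be sketched in Python as follows. This is a rough illustration of the idea, not how simple checksum or checksum does it; the function names are hypothetical, and files are read whole for brevity, which would not suit very large files.

```python
import hashlib
from pathlib import Path

def folder_hashes(folder, algorithm="md5"):
    """Map each file's path (relative to folder) to its hex digest."""
    folder = Path(folder)
    result = {}
    for path in sorted(folder.rglob("*")):
        if path.is_file():
            digest = hashlib.new(algorithm, path.read_bytes()).hexdigest()
            result[path.relative_to(folder).as_posix()] = digest
    return result

def compare_folders(left, right, algorithm="md5"):
    """Return relative paths that are missing on one side or differ in content."""
    a = folder_hashes(left, algorithm)
    b = folder_hashes(right, algorithm)
    return sorted(name for name in a.keys() | b.keys() if a.get(name) != b.get(name))
```

An empty result means the two folders hold the same files with the same contents.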

Packed with intuitive HotKeys and time-saving automatic settings, simple checksum is Handy Indeed!

And simple checksum is COMPLETELY FREE, as in beer. Check it out..

download

Download and use checksum, for free..

# made with checksum.. point-and-click hashing for windows (64-bit edition).

# from corz.org.. http://corz.org/windows/software/checksum/

#

#md5#checksum.zip#2015.07.04@01.26:25

024f061d2262d95d0864fa558fd938f9 *checksum.zip

#sha1#checksum.zip#2015.07.04@01.26:25

199ef31f91c06786a05eeead114c026a67426488 *checksum.zip

# made with checksum.. point-and-click hashing for windows (64-bit edition).

# from corz.org.. http://corz.org/windows/software/checksum/

#

#md5#checksum_x64.zip#2015.07.04@01.26:28

72e1cac7bd2dfd4ce3cf862920350bfa *checksum_x64.zip

#sha1#checksum_x64.zip#2015.07.04@01.26:28

86d8db98f96b5c8e196594667b9d324e066f4215 *checksum_x64.zip

NOTE: If your Anti-Virus software detects anything in this software, I recommend you switch to an Anti-Virus that isn't brain-dead. If you DO discover an actual virus, malware, trojan, or anything of that nature inside this software, please mail me, and I will send you a cheque for a Million Pounds, as a reward. In other words, this software is clean.

These guys agree..

(note: I've now removed checksum from most of these sites!)

(Ahh.. The beauty of PAD Files!)

License Upgrade

If you need to upgrade your ancient license to the new format (checksum v1.3+) go here.

Itstory..

aka. 'version info', aka. 'changes'..

This is usually bang-up-to-date, and will keep you informed if you are messing around with the latest beta, and let you know what's coming up next. Note: it was getting a bit long to include here in the main page, so now there's a link to the original document, instead..

You can get the latest version.nfo in a pop-up windoid, here, or via a regular link at the top of this page.

Leave a comment about checksum..

If you think you have found a bug, click here. If you want to suggest a feature, click here. For anything else, feel free to leave a comment below..

Will checksum do a hash of a complete drive as well? Is there a size limitation?

Situation: I want to confirm an accurate duplication of a hard drive.

Also, can it create a printout to report time and date and the two hashes?

I don't know what you mean about "the two hashes" (each file gets only one), but apart from that..

Yes, complete drives are no problem, I hash entire DVDs regularly. I recently checksummed my entire archive drive, too (160GB).

No, there is no size limit, at least, theoretically. I've not tried anything much over 1.5GB, but there shouldn't be any problems hashing even really huge files.

Logging only takes place on verification, but yes, you can tell checksum to log every single item, success or fail, and the log is always timestamped, looks something like this..

Send me a mail; I'll be putting out the latest beta some time this week (all those who have previously mailed will get something in their inbox very soon) so you can play with it, and let me know how big it really goes.

for now..

;o)

ps. about the "two hashes", do you mean, the original drive, and the duplicated drive? I suspect so. The way to do it is simply run checksum on the drive before duplication, and then afterwards, run checksum again (in verify mode) on the duplicate drive. The checksum files will have been duplicated along with the drive. Keeping the checksum files along with the real files is the best method, by far.
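The before-and-after idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: checksum itself keeps its hashes in files alongside the data, whereas this sketch holds them in a plain dictionary for brevity, and the function names (hash_file, hash_tree, verify_tree) are hypothetical.

```python
import hashlib
import os


def hash_file(path, algo="md5", chunk_size=1 << 20):
    """Hash one file in chunks, so large files never need to fit in memory."""
    h = hashlib.new(algo)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def hash_tree(root, algo="md5"):
    """Map each file's path (relative to root) to its hex digest."""
    digests = {}
    for folder, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(folder, name)
            digests[os.path.relpath(full, root)] = hash_file(full, algo)
    return digests


def verify_tree(root, recorded, algo="md5"):
    """Check a tree against recorded digests; return (changed, missing)."""
    changed, missing = [], []
    for rel, expected in sorted(recorded.items()):
        full = os.path.join(root, rel)
        if not os.path.isfile(full):
            missing.append(rel)
        elif hash_file(full, algo) != expected:
            changed.append(rel)
    return changed, missing
```

Run hash_tree() on the original drive, then verify_tree() on the duplicate with the recorded digests; two empty lists mean every recorded file is present and unchanged.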

hello,

very interesting what you wrote down here, what do I need to do, in order to get a copy of your program :-)

currently I'm using md5summer (http://www.md5summer.org/download.html) to get the job done, but your app looks much more powerful.

hope you release it as GPL?

Best regards from berlin/germany

- Phil

I agree, Phil! Very interesting indeed!

How do you get a copy? Join the "ßeta program", unless you are running XP, I have enough XP testers! However, I am keen to enroll someone running Vista - mail me for details.

You're right, checksum looks much more powerful. That's because it is. Nothing even comes close. From a recent mail from one of the beta testers (all hardened MD5 utility users)..

I love that quote!

I already have a bag of similar quotes, and it's not even released!

Guys! Post them HERE, eh!

No.

Ironically, all my other apps are open source, and though they get many downloads, source packs, too; no one ever mails me about them, or offers their appreciation and thanks, or asks about the licensing terms - copy+paste is easier. Okay, my /windows area hasn't been up for long, but still; already THREE people have asked me if I plan to release the code for checksum! What does this tell you?

But no. I plan to keep this one all to myself. I know from experience that when you release code, it just gets ripped off, and folk go on to claim it as their own (I've got loads of php 'out there', thinly disguised as other things). Almost never will you get actual credit. I don't mind too much, I do it for me, and release it because I can, and have a knack for readable documentation.

With checksum, I decided that there was just so much cool stuff going on inside, no way was I going to let someone come along and just steal it. It's MY baby!

The other reason is that I plan to charge for the full version (the free version will be MD5-only) because I'd like this place to start making some cash! An artist's gotta eat, you know.

for now..

;o)

I just came across another app that gets the job done:

www.slavasoft .com/fsum

I can understand that you hope to get some ca$h, but the problem, I think, is that every security-related application has to be open source, so that everybody can check the source code for threats.

But of course I can also understand if you want to earn some money - hope it doesn't cost too much, but from what I understand there will be also a free version, nice.

I sometimes use paypal to honor good work from people, releasing their work for free.

keep us informed about future updates - if you're interested in beta-testing for win2k, drop me a line.

- Phil

www.slavasoft .com/fsum

One of many many many that will "do the job", but not in a way that this human finds acceptable. And judging by my inbox recently, many other humans, too.

No. Checksum implements widely-known, open-source algorithms. There's nothing inherently secure or insecure about checksum itself, so these sorts of concerns would be completely misplaced.

Both the MD5 and SHA1 algorithms are not only open source, they are public domain - feel free to check their robustness at any recognized code archive, as well as checksum's ability to 100% comply with their specifications using the reference checksums provided at MIT, wikipedia, etc.
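Checking an implementation against reference values takes very little code. The sketch below uses Python's standard hashlib as a stand-in for the implementation under test (it is not checksum's hashing library) and the well-known published digests of the empty string and "abc". The helper names are hypothetical.

```python
import hashlib

# Published reference digests for the empty input and for b"abc".
REFERENCE_VECTORS = [
    ("md5", b"", "d41d8cd98f00b204e9800998ecf8427e"),
    ("md5", b"abc", "900150983cd24fb0d6963f7d28e17f72"),
    ("sha1", b"", "da39a3ee5e6b4b0d3255bfef95601890afd80709"),
    ("sha1", b"abc", "a9993e364706816aba3e25717850c26c9cd0d89d"),
]


def hashlib_digest(algo, data):
    """Stand-in implementation under test: Python's own hashlib."""
    return hashlib.new(algo, data).hexdigest()


def failing_vectors(digest_fn):
    """Return the vectors for which digest_fn(algo, data) gives the wrong digest."""
    return [
        (algo, data, expected)
        for algo, data, expected in REFERENCE_VECTORS
        if digest_fn(algo, data).lower() != expected
    ]


if __name__ == "__main__":
    # An empty list means every reference vector matched.
    print(failing_vectors(hashlib_digest))
```

Any other hashing tool can be tested the same way by wrapping it in a function with the same (algo, data) signature.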

Even if I wrote the loosest, most insecure code on the planet (which I don't), it wouldn't affect anyone's security in the slightest, so long as the checksums themselves are 100% accurate, which they are. Bottom line: the only person that needs to see the source, is me.

Yes, I do! I've been releasing open source code for years, with tens of thousands of users, yet I could count the donations on one hand. But in spite of that, yes, there will be a free version. The only difference; the free version will do MD5 only. For the vast majority of people, md5 is all they need, so even with the SHA1-enabled payware version, it will still be a miracle if I can cover my domain fees!

Simple checksum (included, and also free) works with SHA1 as well as MD5, though on a one-shot basis. checksum "pro" (haha!) will be around £10.

You are, quite literally, one in a million! Feel free to browse around the corz.org/engine !

The itstory (aka. "changes") above these comments, is dynamically included, and sometimes you can quite literally watch it grow, live (with a page refresh, anyway). If something happens, it'll be there.

I'll see if I can find your email address in the list (I'm assuming you entered it at some point), because I'd like to know if everything's okay on Win2K. I today got confirmation that it's working swell on Vista, so 2K would be the Hat-Trick.

Thanks!

;o)

I just came across another app that gets the job done:

www.slavasoft .com/fsum

Hey phil,

FSUM was one of the many hash checking utilities I've tried during my quest to find the perfect one. Although it's a very good one, it simply cannot handle the types of "jobs" I've been able to accomplish with checksum.

I've written advanced batch scripts for FSUM which have greatly enhanced its "out-of-the-box" capabilities but even then it doesn't handle certain situations as well as checksum does.

If you just need a program that will let you make a checksum file for certain files/folders, or a program that will let you verify a single checksum here and a single checksum there whenever you need to, then I agree with you, FSUM will get the job done. But if you need something more powerful, then you will love checksum.

And just when you think it can't get any better, it gets better! cor is always adding new features and making improvements in one way or another. checksum really is great software and I highly recommend it.

-seVen

I agree with seVen!

And just when you thought it couldn't get any better.. Along comes spiffy HTML Logging!

Here's a sample of the output..

https://corz.org/windows/software/checksum/files/checksum-example-log-output.html

;o)

When will checksum leave the beta-testing-phase ... I'm just curious to try it out myself and I want to make sure, that I really don't need to look any further for another checksum-utility.

The current version is bug-free, as far as most of my testers are concerned - but actually contains a couple of beauties. They only occur under unusual circumstances, but still, it will need a little more work yet before a general release.

I'm tied up for the next couple of weeks, so it's unlikely I'll be fixing them until after that, but if all goes to plan, the release version will hit this page at the start of the new year.

Something to look forward to, eh!

;o)

ps. about the source (from earlier) I meant to mention, I am referring to the checksum program source; not the actual MD5/SHA1 routines in the hashing dll; the source for which you can easily check by following the credits in the dll's version information (via Explorer properties dialog).

Excuse me,

how can I download "checksum" program?

This would be an absolutely awesome program to integrate into a file level scan for a file copy/compare process. Parsing large (> a TB) volumes of millions of small files could largely benefit by a file system scan via hashing. It would allow the user to create a hash, copy all files to a destination as a backup, then the following day another run could be commenced copying the differential data to the destination, but the origin file system could be scanned via hash rather than a scan and compare operation. Sounds fast anyway.

Any updates on a release date?

If you have a feel for a release date, please let us know. Really interested in seeing checksum and simple checksum in operation.

Cor,

Your beta history section lists version 0.9.19 on 4th Jan, but nothing past v0.9.18.1b seems to be available...

-thm

There's a bit about this in my /blog/.

In short, I'm just back from a rather long tech-break (though sadly not a holiday!) and checksum is definitely on the list of jobs for March.

I've decided to just release checksum for free (actually, "shirtware" - see recent blog for more details), and the next checksum job is to rip out the payware code and run some tests. There will be one more beta release (rc1) in a week or two (God-willing) for the beta testers (at least, those that mailed me along the lines of "hey! my beta ran out!"). If all the reports come back favourable; a proper release will follow soon after.

In the meantime, those who haven't read the itstory are urged to do so (starting at the bottom!); not just to appreciate the tremendous amount of hard work that's gone into checksum, but also to get a handle on its many features, some of which aren't documented elsewhere.

for now..

;o)

I'm so sad. I spend all that time reading the page and getting excited about checksum...and it's in closed beta testing.

Boooo

In the trade, we call this "building anticipation".

;o)

Glad to hear about this project! Like many others, I've been persistently irritated with current checksum tools.

Are you planning to add SFV support down the line? I know there are good current utilities for Windows but it'd be nice to get them all in one reliable package.

I haven't even considered SFV support; basically because it's fairly worthless. An "upgrade SFV checksums to MD5/SHA1" might be doable. Creating SFV, however, is not on the cards.

;o)

ive been waiting for this since last year. any news on the release cor??? i think youve built up enough anticipation.

Hey, if you're that desperate, mail me, and I'll throw you in with the beta testers!

;o)

Hey, I have a question. I know that MD5 software can usually be used to check the contents of a burned data DVD against a MD5 file. But what I'm interested in is checking burned DVDs as a whole. Like in ImgBurn, when you go to verify a previously burned DVD against the original ISO file, and it shows you that the MD5 checksums of each of them match. I'm looking for a way to do the same thing, except using a MD5 instead of an ISO file for verification. Will your program work for that?

cor, i sent you an email from a gmail account. look out for it.

galva12, what you are trying to do sounds, to me, like a poor second-cousin to what checksum actually does. It also sounds like you are keeping the old ISO around, which would be a huge waste of space.

Doing things the checksum way gives you way more flexibility - you can check every file on the disk with a single checksum file, or one checksum for each file, with the checksums on the disk, or elsewhere, or both. If you need to know the status of a single file, or all files, you can do that.

I don't see any advantages to the ImgBurn method. In fact, I think an md5 which changes because dates and times of file access change, is a recipe for user confusion. No, checksum won't be going there!

Having said that, you could do what you want with checksum, by simply ripping an ISO of the disk at any time, and comparing it to the original iso md5.

Thanks noname, I'm still catching up some after my birthday celebrations, but I did get your mail. Expect a reply soon.

;o)

I didnt know you had your birthday recently cor.

Hey cor, thanks for replying. I don't think I explained what I'm trying to do properly though. As you said, keeping the ISO files around would be a massive waste of space, which is why I'd rather verify my burns after the fact using premade MD5 files.

I'm dealing with images that don't contain just easily-accessible data files though; they're in some kind of convoluted format that makes it impossible to scan them file-by-file; they aren't listed in Windows explorer. I need a checksum of the DVD image as a whole.

In ImgBurn, when you verify a DVD it's shown that the checksum of the original ISO file is identical to that of the burned DVD. I'm just looking for a way to cut the ISO out of it, and use a premade checksum of the ISO instead. It's no task to get the ISO's checksum, but I haven't yet found any method to get the checksum of an entire DVD, as an image and not file-by-file. I wonder how ImgBurn does it?

It simply reads the disc into an ISO image. You can do this with most burning tools; Nero, MagicISO, etc. Once you have your image, you can checksum it, and compare it with the original ISO md5.

ImgBurn probably just hashes-as-it-reads, which is kinda slick, as you don't have to dump the ISO file anywhere, missing out the middle step.
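Hash-as-you-read is easy to sketch: feed the hash in chunks while reading, and nothing has to be written to disk in between. The Python below is a guess at the general technique, not ImgBurn's actual code; it works on any readable binary stream, and opening a raw disc device as such a stream is platform-specific and not shown. The function names are hypothetical.

```python
import hashlib
import io


def stream_digest(stream, algo="md5", chunk_size=1 << 20):
    """Hash a binary stream chunk by chunk, without storing a copy of it."""
    h = hashlib.new(algo)
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        h.update(chunk)
    return h.hexdigest()


def stream_matches(stream, expected_hex, algo="md5"):
    """Compare the stream's digest with a premade hex digest."""
    return stream_digest(stream, algo) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Stand-in for a disc: any binary stream is read the same way.
    fake_disc = io.BytesIO(b"abc")
    print(stream_matches(fake_disc, "900150983CD24FB0D6963F7D28E17F72"))  # prints True
```

With a premade MD5 of the original ISO in hand, this is all the comparison needs; the ISO itself can be deleted.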

;o)

ps. cheers defier!

I'll be emailing you in a few minutes. I have tried all of the software listed at these two sites

http://en.wikipedia.org/wiki/Comparison_of_file_verification_software

http://koolmonkey.bravehost.com/sfv-md5.html

and even the ones that cost money are not very good (I can't afford them anyway). I am religious about the security of my data, and the only good piece of software I've been able to find is ViceVersa (http://www.tgrmn.com/index.htm), which does automatic backups as soon as you edit a file, and then it does a CRC32 check to make sure the backup is good. The advantage ViceVersa has over a RAID 1 mirroring setup is that ViceVersa also keeps old copies in an archive. I can't tell you how many times I've spent two days editing a file, and then realized that the version I had 3 days (or months) ago was much better, and I was glad that VV kept the old copies for me. It's not CVS versioning or revision control, but it's good enough for just me on my machine, while I work on something that may have come from a revision control system (RCS) like Subversion or SourceSafe.

Anyway, I've got backup reasonably solved with ViceVersa. Now I need Checksum for general purpose use, like the times when I need to make a manual backup copy. Gimme? Beta? Please? Desperate!

I got that, ta.

I feel the same way about my data; more and more people do. My approach was to bug the developer of my favourite text editor to add comprehensive backup facilities, and now I have a folder with every single version of every single thing I've worked on, timestamped in neat directory-named folders. Pretty cool, though sometimes it does get HUGE, and needs to be archived. Suits me fine. Btw, surely you mean RAID5.

As to the checksum applications, yup, they're all crap!

I just put out rc1 to the beta testers on Wednesday. Early feedback..

I love it, love it, and love it... Bravo!

..and as I work on rc2, I'm looking to widen the net some; let a few more folk try it. There will be no more free licenses, but as I'm putting it out as Shirtware, I guess that's less of an incentive, anyway.

Look forward to some juicy download details in your inbox in the next few minutes. All feedback welcome.

;o)

I like raid 1, since it's not about write speed for me, just cheap redundancy with only 1 extra disk. My text editor does backup too, but I just let viceversa handle it, since it'll back it up as soon as I change something.

Yup, that's what Editplus does. Every single change creates a new backup. If you enable it, that is.

Ahh right.. RAID1. For some reason I get RAID0 and RAID1 mixed up. I don't use either, is probably why.

;o)

What's up cor!

Glad to see checksum is close to being released to the public! To tell you the truth, it's so good that it makes me feel guilty that I (as a beta tester) get to use it daily while the rest of the world has to deal with the pains of using inferior hash-checking software.

Don't worry guys, hopefully you will all have it soon. Only then will you truly understand

Yes, we're making the world a better place for our children, and our children's children! Hopefully we can handle the pain of guilt for a wee while longer, yet!

Hey! I did add another batch of testers to the latest release (rc2), yesterday. Anyone who has mailed over the last few months about testing checksum should definitely check whatever address they used - there could be juicy download details waiting for you right now!

I'm thinking I might go semi-public with rc3. I've been messing around with my mailer this week, getting it ship-shape to handle the thousands of folk who have signed up for "corz odd mailing" (a list I've not used yet) with a view to letting them have a crack at checksum before a full release. But it's slow going, and I keep finding reasons to do something else instead. I might just install PHPList and be done with it!

Anyways, in ever increasing circles, it's coming folks, and real soon.

;o)

The only checksum utility I could find on the net.

Thanks a lot

I just read through the changelog. It looks like checksum is getting a lot of work done on it to polish it up for a release. Thank you very much for all the work you've done.

Yes! And so far only a single bug report for rc3 (which is already fixed).

So it's looking like Real Soon Now!

;o)

This is just what I've been looking for! I can finally replace the non-recursive QuickSFV

As for speed I really can't see how you can improve much. On PCs today you're usually limited by disk I/O rather than processing power. Not that less cpu usage isn't welcome

Can you compare two directories with this tool without generating md5 files everywhere? E.g. to verify that a file copy has been done without errors.

Any thoughts on including par2 support or some other repair capable algorithm in the future?

Enough nagging

Thanks for using your spare time to make this. If it works as well as you say I'll recommend it to everyone I know, and I'll definitely donate some money.

Para Noid, thank you! Yes! I've been working on it whenever possible, check out the itstory for details - the last thing I wanted before calling it 1.0, and letting it out, was a "unified hash extension", as I like to call it. I'd already done the tricky bits, teaching checksum how to interrogate files line-by-line, and not give a damn about the file extension. Who knows what hashes might be in future files, and one more file extension is quite enough!

I got that done a couple of nights ago, and the unified .hash extension is with us now; checksum phase two, complete. Pretty new icons and a funky PAD file followed suit.

As for speed, yes, I had a similar conversation with a beta-tester, and it's totally true; utilizing 100% cpu for a file hash is, for now, a pipe-dream, and file system I/O is always the deciding factor. Having said that, it's nice to get a file into ram and just go Woosh! See that's possible. It is rather fast, and the aforementioned conversation is definitely at the back of the most recent batch of speed improvements - I had to get it into the top-three! And as storage devices get faster, so will checksum!

Ideally, I get T-Shirt Three-of-Three ready, and then do one grueling night of checksum promotion, dropping blogs, signing up with download sites, dropping a link right here being the one I look forward to most. This page needs some completion! Especially since the beautiful search engines of the world are already sending people.

But Three-of-Three is "The Amazing Metaphysical Traveling Map", and for years it has caused me no end of grief, refusing to settle itself into one simple design. I literally have hundreds of sketches, the concept being more important, of course, but design is important, too, as any user, even potential user of checksum appreciates. I'll get there, but if I don't nail it within a week; that and an even smarter way to "upgrade" multiple hash files to the new unified hashing scheme, then it's getting released anyway.

A week, tops.

Well, they wouldn't go everywhere! Just inside the directories in question. I know what you mean, though. No. checksum makes real hash files*. However, it will happily work with hidden checksum files, creating or verifying them, so you don't have to see them if you don't want to**. Having said all that, hash files a) are rather reassuring, sitting there, and b) have a really cute new icon, took me ages!

It also seems wrong, somehow, to not keep the hash data. We used CPU cycles to create something, a state of things, captured. Why not keep that data? I like to think that we'll all get used to living with hashes. They are so small and beautiful, and perfect! "Perfect until your data isn't!". Keep 'em! I say!

All I know of Par is that you make tiny wee extra files that somehow can be used to re-create missing parts of very large files, usually Rars. It seems to defy the laws of physics, even though I'm reliably informed that it's all true. Which is to say; No, I haven't considered it. I'm not at that stage with PAR, yet, and even if I was, I'm not sure it's something checksum should be doing, anyway. Of course, I'll happily accept enlightenment on this topic!

Keep 'em coming!

;o)

ps. I had to dash out in the middle of that post, sorry about that!

* For quick file-compare tasks, I more usually use simple checksum, checksum's wee brother. And the more I think about it, the more I think that if I added these capabilities anywhere, it would more likely be to simple checksum.

** I used to always keep hidden files visible in my desktops, but more and more these days, I like to keep hidden things hidden - I did a macro to hotkey between the two states, when required.

I totally agree with your blurb. Making MD5s was always a pain! I Have been checking this page regularly for a couple of weeks since I first found it. It's great to see checksum released at last! AWESOME! It's exactly what I've been looking for!

Thank YOU!

JkR

p.s. It was a bit weird my md5 files becoming hash files but I totally get where you are coming from with that and I hope it catches on. Good luck!

Looks like JkR is another happy camper

I agree, it's awesome to see checksum finally out in the open. I bet cor is PROUD right now.

As a beta tester, I've been lucky enough to have checksum by my side for quite some time now. And over time, I sometimes forget about all the trouble I used to go through just to verify a couple of folders or recursively create hashes. checksum is just so simple, and well, it just "makes sense".

Why did it take so long for something like this to be created? That's something I'll always wonder. It's like all those other developers of hashing software just didn't care... I'm glad one of them did.

Thanks guys!

I actually started on a new feature minutes after I uploaded rc4; a request; WAV file alerts. Tonight, I also made a wee change to the installer's setup.ini (removed Explorer context menu "dividers"), and built an rc5. It's up now, in the same download place.

Keep the feedback coming, here and in my inbox, it's all good, even the bad! - though don't be insulting, cuz I'm a right c*nt when I'm annoyed!

It's about ready to be labeled v1.0, and promoted left, right and centre, I think; which will probably be at the weekend, when I'll have more time to trawl the popular download sites*.

Feel free to tell anyone and everyone about checksum, even torrent it at your favourite tracker, whatever; it's good, so let's get it out there!

for now..

;o)

When checksum appears for download on a popular download site, post it here so I can get it. If my download fails for whatever reason, corz.org blocks me from trying to download it again.

If you use a regular web browser, and don't try anything funky, there's absolutely no reason your download would fail. And if it does, email me, and I'll gladly send you a copy by hand.

How's that for service!

And by the way, the popular download sites will most likely just post a link back to here. Why wait? It's already here!

;o)

when you going to make a tshirt with your emoticon logo thingy?:

;o)

Great work. Thanks.

notnymous said..

;o)

It has crossed my mind, but my self-indulgence-o-meter is already in the red, so I'll probably at least wait until I get the first three designs ready. Also, it encourages "fans", and that was one of the reasons I got out of the music industry when I did!

I guess digital fans is okay; buying shirts, as opposed to ripping them off your back! It's a cool smiley, but, would YOU buy such a shirt?

;o)

ps. if I could find a manufacturer that could reproduce my site gradient on a T-Shirt; yes, I'd go for it, even just for myself.

I've been using a command line MD5 utility that does only files. But if a folder matches the filespec it attempts to access it and fails and quits. Inane behavior.

Oh, I've seen a lot crazier behaviour than that in hashing utilities! But let's forget about those, and look to the future: checksum.

;o)

Thanks a lot for writing this! I'd been using a command line md5 tool and was looking forward to point-and-click ease.

I deal with big (1GB - 13GB) video files that are typically moved around or backed up individually, so I always use the "individual checksums" option. I would like this to be the default. Unfortunately every time I right click on a directory and choose "Create checksums" I get one checksum file in the dir. The only way to get the "individual checksums" is to do the shift-key thing every single time, which gets old. Is there any way to have the "individual checksums" choice stick?

Also: Is there any way to have an audio alert only when there is an error? The pc speaker beep quickly becomes very irritating.

Thanks ...

Hey, Mr. Snout, no problem!

checksum's operation is completely controlled by switches which makes it extremely flexible, so although there's no actual preference for "always create individual checksums" (I hadn't considered someone might want this set permanently, though I'll be sure to add this for a future release - cheers!), it's easy enough to achieve right now, simply add the i switch to your explorer context menu commands.

There are at least two ways to achieve this; probably the quickest is to add the i switch to the directory and/or drive commands in the registry..

HKEY_CLASSES_ROOT\Directory\shell\01.checksum

HKEY_CLASSES_ROOT\Drive\shell\01.checksum

Currently the switches in the command will be cr, so make them cri, and from then on, folder checksum commands will always create individual checksum files. Of course, if you occasionally want the usual per-folder checksums, you can do the SHIFT thing.

You could also create additional commands specifically for creating individual checksum files directly from the explorer menu in much the same way as the custom music file commands I outline in the tricks & tips page, here. You could even make the context menu entry specific to movie files, using crim(movies).

If you don't want to play with the registry, simply edit the installer's setup.ini file, adding the switches in there, instead..

HKCR\Directory\shell\01.|name|\command="|InstalledApp|" cri "%1"

HKCR\Drive\shell\01.|name|\command="|InstalledApp|" cri "%1"

and then reinstall checksum to get your updated Explorer context menu items. Actually, that's probably even quicker than editing the registry!

As for the audio alerts; if you want checksum to not beep on successful completion, yet still beep on any failures, simply set beep_success to a blank WAV file.

checksum will still alert you of failures with either a WAV or beep, depending on your preferences, but all success notifications will be completely silent. I've uploaded a small, blank WAV file for the purpose, here.

Of course, audio alerts can also be disabled completely in checksum.ini, if you wish.

Have fun!

;o)

To those who have read the page, and are now just following the comments..

There is a new section!

Check this out!

;o)

Very good. I also was thinking hashes should be done with point and click.

Thanks!

Hey cor. That's a nice tool - unfortunately it isn't what I'm searching for. Though it might be easy to make it so.

I'm actually looking for a tool with which I can easily check a file against a hash published on a web site. You too publish the hashes for your file here but to check the downloaded zip against it, I'd have to generate a hash file, open it, compare it against the one on the website, close the file and delete it.

So any way to generate and just display a hash to compare it visually? Or even better compare it automatically if a hash is found in the clipboard.

Thanks, chris

Hey Chris. I anticipated this need, and created "simple checksum", checksum's wee brother, which does exactly what you want. It's also very slim, and designed to sit out of the way somewhere, so you can do visual comparisons easily with your browser open - you can even make simple checksum semi-transparent, and leave it there.

As a bonus, you can also switch easily between MD5 and SHA1 (there's an application menu item and HotKeys for both) and simple checksum will recalculate the other algorithm for you, without the need for a second drag-and-drop. It comes free with checksum, just start it up!
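For the curious, a clipboard-style compare boils down to very little logic. The Python sketch below is an assumption about the general approach, not simple checksum's own code: it tidies up whatever text was pasted, guesses the algorithm from the digest length (32 hex characters for MD5, 40 for SHA1), and compares. Reading the actual clipboard is platform-specific and not shown; the function names are hypothetical.

```python
import hashlib

# Assumption: the digest length identifies the algorithm.
ALGO_BY_HEX_LENGTH = {32: "md5", 40: "sha1"}
HEX_DIGITS = set("0123456789abcdef")


def normalize_hash(text):
    """Strip whitespace and lowercase; return None if it isn't a hex MD5/SHA1."""
    candidate = "".join(text.split()).lower()
    if len(candidate) in ALGO_BY_HEX_LENGTH and set(candidate) <= HEX_DIGITS:
        return candidate
    return None


def compare_with_pasted(data, pasted_text):
    """Return True or False for a match, or None if the text is not a usable hash."""
    expected = normalize_hash(pasted_text)
    if expected is None:
        return None
    algo = ALGO_BY_HEX_LENGTH[len(expected)]
    return hashlib.new(algo, data).hexdigest() == expected
```

Returning None for unusable text lets a caller tell "no hash in the clipboard" apart from "hash present, but it does not match".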

Cheers, Laz3r. In fact, you pre-empted my new checksum slogan, there! Once the new beta goes up, I'll upload all the new pages, and you'll see what I mean.

;o)

Well cor, that surely works. But where to put that app to be invisible when not used but at hand when needed? Holding Ctrl while starting the app from context menu does something else than without but I'm not that sure what it is for. I still think it would be a nice behaviour to tell checksum to only display the result when a modifier key is pressed.

BTW, when a hash file's content is verified, checksum tries to start cmd.exe. If I prevent that, everything still works. What is the aim of this?

Nonono chris, simple checksum is a totally different application! If you ran the checksum installer, it should have been placed beside checksum.exe, in the program folder; it's the one with the green icon. Click this link; it's a page all about simple checksum.

There are no modifier keys for simple checksum. You just run it, and it stays open, displaying hashes for whatever you type into it, or drop onto it. You can toggle it (show/hide) by simply clicking its tray icon.

checksum doesn't display hashes, ever. checksum's job is to create and verify hash files.

But neither checksum nor simple checksum attempts to start cmd.exe, at any time, for any reason; I suspect something else is happening on your system. Feel free to mail me more details about this if you like.

However, checksum will attempt to run compact.exe after creating logs, to compress them (more details in the itstory), and though compact.exe is a console application (it's built-in to windows) it should all happen in the background, and shouldn't be seen. If it's not happening in the background on your system, then I definitely want to know more about it; OS details, etc, in my inbox, or here, cheers!

;o)

Hi again!

I've been testing checksum on about 1 TB worth of files and it looks good so far. Easy to use and has several features. There are however a few features I miss which you can consider or just file under /dev/null

- Delete missing files from hash files

I have replaced several files with newer versions but the old files are still listed in the hash files which lists them as missing every time I do a verify. I can delete them manually from the hash files of course but where's the fun in that?
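
Until something like this exists in checksum itself, the clean-up can be scripted. The Python sketch below assumes md5sum-style lines (`<hash> *<relative path>`) with `#` comment lines; that layout and the names `parse_line` and `prune_missing` are assumptions for illustration, not a description of checksum's own code.

```python
import os

def parse_line(line):
    """Split an md5sum-style line into (hash, path); return None for comments and blanks."""
    text = line.strip()
    if not text or text.startswith("#"):
        return None
    digest, _, name = text.partition(" ")
    # Assumed layout: a space, then an optional '*' binary marker before the path.
    return digest, name.lstrip(" *")

def prune_missing(hash_file_lines, base_dir):
    """Return (kept_lines, removed_paths), dropping entries whose file no longer exists."""
    kept, removed = [], []
    for line in hash_file_lines:
        entry = parse_line(line)
        if entry is not None and not os.path.exists(os.path.join(base_dir, entry[1])):
            removed.append(entry[1])
        else:
            kept.append(line)
    return kept, removed
```

The function only returns the pruned lines and the list of dropped paths; writing the result back is left to the caller, so the removals can be reviewed first.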

- Rename files to match filename in hash file or vice versa

Let's say a file has been renamed for some reason. Could be an irc server replacing spaces with underscores for instance. Or someone has renamed a file to match their own personal format.

The idea is to calculate the checksums for all files in a dir that aren't listed in the hash file(s), see whether any of them matches the checksum of a hash entry that is missing its file, and rename it accordingly.

Some considerations: Empty files should be ignored (and very small ones?). Multiple hash files can point to files in the same dir so it's probably a good idea to try to merge as many as possible to find the files that don't have a hash.

Another option that's much simpler to implement is to set it to only run on single hash files. It could then look for missing files in that hash file regardless if they're listed anywhere else.
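
The simpler single-hash-file variant could look roughly like the Python sketch below. It assumes the hash file has already been parsed into a `{listed name: MD5 hash}` dictionary for one flat folder; `md5_of` and `plan_renames` are hypothetical names, and nothing is renamed, only a plan is returned.

```python
import hashlib
import os

def md5_of(path):
    """Return the MD5 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()

def plan_renames(entries, directory):
    """entries maps listed file name -> expected hash. Returns {current_name: listed_name}."""
    present = {n for n in os.listdir(directory)
               if os.path.isfile(os.path.join(directory, n))}
    # Hashes of entries whose file is missing, keyed by hash for quick lookup.
    missing = {digest.lower(): name for name, digest in entries.items()
               if name not in present}
    plan = {}
    for name in sorted(present - set(entries)):
        path = os.path.join(directory, name)
        if os.path.getsize(path) == 0:
            continue  # empty files all share one hash, so a match means nothing
        # pop() so that one missing entry is never claimed by two files
        target = missing.pop(md5_of(path), None)
        if target is not None:
            plan[name] = target
    return plan
```

Only files that are not listed get hashed, so the cost is limited to the unknown files in the folder.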

I'm sure I can give you more suggestions if you're interested. :-)

Keep up the good work :-)

Yes, good work! I've just been testing checksum out on my archive, very impressed. It does lots of things I've wanted to do but couldn't until now. Now I just have to acquire a "decent text editor" (as you say!) so I can have a proper fiddle with all the preferences.

No one has mentioned simple checksum I see, it's very handy too. They make a good pair.

Thanks for all your work!

Excellent! Thank you.

I don't see where version 1.1b can be downloaded. Has it been released yet?

Thanks guys!

Cheers for the suggestions, Para Noid (and the other thing!). "Delete missing files from hash files" sounds useful, but highly risky; like you say, it wouldn't be the default! I'll definitely consider a special switch for this, though.

"Rename files to match filename in hash file or vice versa" I'll have to think about. If I did something like this, it would almost certainly work on a line-by-line basis, so the location of changed file names would be whatever scheme the original name was, regardless of relative or absolute paths. You can mix them all up real good in the latest version.

Setting this for only "single hash files" is doable, so long as we remember, the idea of a single hash file is purely a virtual one, at least in verification (there is no "i" switch for verification, for this reason). A hash file is viewed simply as a container for hashes. Where each individual hash points, is determined on a line-by-line basis. I'll definitely consider all this, though.

notnymous, it's mostly done. Unfortunately, I've been away a lot this last week, and that will be true for a few days, yet, so the list is slow-going. I'm hoping to have 1.1b up this weekend, but that's not a promise!

Meantime, you could play with Batch Runner, a small but useful wee app I put up tonight; something I've been trying to make time for all week. I built it to run batches of tests on checksum before releases, but it's already proving handy for other jobs.

;o)

ps. Randy, also, their icon colour schemes complement each other beautifully!

Nice. Thanks.

awesome!!

I got an error installing on Vista (SP1) about the zip.dll not getting registered. I got the folder out of the zip, and ran it again and it worked, but I thought you should know.

Great app, it was worth it!

All the best.

Bobz

Sorry about that, Bobz. It's actually sorta fixed, but y'all have to wait. For others who experience this, simply unzip the files.zip inside the "files" folder (yup, that's right, it will be /files/files/) and edit the setup.ini to read "files" instead of "files.zip". Continue as normal.

That x-zip plugin has been more trouble than it's worth, and while working on corzipper (to come) I decided to bin it. I've now built a whole new zip plugin especially for my own stuff, which will be used for the installer, as well as some of my other apps (corzipper/backup/and more). As well as great performance, it boasts password-protected zip and unzip, which I utilize elsewhere.

x-zip will still be supported (my apps will look for my own zipper first, and fall-back to x-zip if it's not available) but I'll be phasing it out ASAP. As well as occasionally not registering itself, it uses available memory for all its operations, so while it's good for small things, installers and such, it starts to wobble as you increase the size of the archive. The new zipper plays nice with your RAM, and never uses more than around 8MB to zip or unzip an archive of *any* size.

The new zip plugin is just one of a whole host of new stuff coming to the corz.org windows software area, soon. I also plan to install Vista myself this week - I hear SP1 makes Vista "not-crap", so here goes!

Probably all my stuff is full of Vista-related bugs, so if all goes to plan, I'll start squashing them in a few days.

l*rz..

;o)

Just wanted to add my support for Para Noid's suggestion for dealing with renamed files. Would definitely save time as opposed to renaming them in the hash file since a lot of the time I will hash files as soon as possible when I get them and only worry about renaming them later.

Totally awesome program though! Was exactly what I was looking for. It's amazing how far behind most of the other programs out there are.

Thanks Cor

PS: noticed you are hashing "Van Diemans Land" in the other screenshot. I live in what used to be called Van Diemans Land!!!

Hi Cor,

Your software looks like what I've been looking for, although I'd like to confirm a few things.

I've used a few crc/md5/sha file checkers that will do just that, i.e. compare a single file to a published MD5 value etc.

However, I'm after a program where I can, say, create an MD5 of the sum total of folders with hundreds of files (or even a volume with hundreds of folders and thousands of files etc.).

There have been some problems in the past with external hard drive (usb) chipsets vs file sizes etc. Plus, where any data is being moved, say from your PC to Flash Memory, DVD media or an external hard drive, I would find it useful to check if the files are an exact copy. I don't need to have an MD5 created for each file, but simply, if I copy and paste a whole Volume (bar hidden system files etc), can I use your program to confirm if the 100Gbytes of data was copied to its destination successfully?

Thanks.

Fated to use checksum!

Thanks Jeff; I'll definitely be looking into the renamed files thing during my next code block; though it will most likely be a special function, quite removed from the regular create and verify processes. Reason being, during verification, checksum doesn't care what files are in the folder, doesn't even look; its only concern is files listed in the hash file, so checking for renamed files could potentially introduce some overhead which I wouldn't want affecting regular (super-fast) usage. Perhaps a "refresh hash file" function. Hmmm.

The way I usually do it, currently, is to verify the hashes, then make changes, renaming, ID3 tags, etc, then write a completely fresh hash. It only takes moments with checksum. Still, a refresh function would be handy.

Vorlon, checksum has no problem handling whole volumes with hundreds of Gigabytes, even Terabytes of data (I recently received a gushing thank-you mail about checksum's brisk work on a 5TB archive, so I can say that with certainty!), and if you wish, you can put all the hashes into one single "root" hash file (again, checksum can handle massive root hash files). Though initially dubious of the usefulness of root hash files, I do find myself using them more and more myself these days, particularly in cross-platform work.

Also, checksum will happily hash hidden files, system files, even locked and in-use files, so you can be 100% certain that the volume was copied exactly. Or not, as the case may be!
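
To make the idea concrete, confirming that a copy is exact comes down to hashing both trees and comparing the results path by path. The Python sketch below illustrates that principle only; it is not checksum's implementation, and `hash_tree` and `compare_trees` are names invented for the example.

```python
import hashlib
import os

def hash_tree(root, algorithm="md5"):
    """Map each file's path (relative to root, forward slashes) to its hex digest."""
    hashes = {}
    for folder, _subdirs, files in os.walk(root):
        for name in files:
            full = os.path.join(folder, name)
            digest = hashlib.new(algorithm)
            with open(full, "rb") as handle:
                for chunk in iter(lambda: handle.read(1 << 20), b""):
                    digest.update(chunk)
            rel = os.path.relpath(full, root).replace(os.sep, "/")
            hashes[rel] = digest.hexdigest()
    return hashes

def compare_trees(source, copy):
    """Return (missing, changed): paths absent from the copy, and paths whose hashes differ."""
    src, dst = hash_tree(source), hash_tree(copy)
    missing = sorted(p for p in src if p not in dst)
    changed = sorted(p for p in src if p in dst and src[p] != dst[p])
    return missing, changed
```

Two empty lists mean every file in the source exists in the copy with identical content. A root hash file serves the same purpose without rehashing the source each time, since the source hashes are stored once and reused.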

Give it a whirl!

;o)

ps. here's a tip.. I note that someone mentioned (either here, earlier, or in a mail) that they found reading two hashes (to compare them) a choreful process; here's how I do it.. Paste hash one into an editor, then select all and paste hash two over the top of it. If you then Undo and Redo you can see any variations at-a-glance. I think most people just read the first and last few characters, anyway!

In the future, simple checksum will do this automatically, but that's for in the meantime!
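
In the meantime, the same at-a-glance comparison can be scripted. This small Python sketch (the name `diff_hashes` is made up, and it has nothing to do with how simple checksum will do it) returns a marker line with a `^` under every position where two hashes differ.

```python
def diff_hashes(first, second):
    """Return a marker string: '^' where the two hashes differ, ' ' where they agree."""
    a, b = first.strip().lower(), second.strip().lower()
    width = max(len(a), len(b))
    return "".join(
        "^" if i >= len(a) or i >= len(b) or a[i] != b[i] else " "
        for i in range(width)
    )
```

Print the two hashes on consecutive lines with the marker line underneath; a marker line with no `^` in it means the hashes match, ignoring case and surrounding whitespace.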

Hi Cor,

Is it possible to do retrospective checking on read only memory storage ie previously burnt cdr/dvdr media with checksum?

Scenario:

Say you have already burnt an archive DVD-R of your drivers and apps etc prior to having installed or even known about checksum, but the directory/file structure still remains exactly as it was on your hard drive volume when you did the copy.

Could you then install checksum to your system and then hash that original source volume/drive/folder/file structure etc, then afterwards use checksum to verify the DVD-R but use the *.hash files of original source (on the Hard drive) as the MD5 reference of the files on the DVD-R?

Basically does checksum need a *.hash file/s on the media it's checking in all cases or can it do it "remotely" (so to speak)?

Best regards,

Steve

Yes Steve, checksum's operation on read-only volumes has received quite a bit of attention, internally, and there are a number of ways to achieve what you want.

Probably the easiest method in your situation, is to work in reverse, that is; create a "root" checksum of the read-only volume, and then simply copy that to the original location on your hard drive, and click it. You're done.

Note, checksum won't create a root hash file by default; you can either use the one-shot options to set that, add the "1" switch manually (if working from the command-line), or if you do this sort of thing a lot, you might want to set checksum's read-only "fallback_level" to 1, in checksum.ini.

There's more information about checksum's various read-only fallback strategies, here.

I should add, you can also create and verify hashes containing absolute paths, for truly "remote" hashing, as you put it. This can be highly useful, but could be limiting if some fixed volume got a letter change in the future, or was moved to a different machine where its original drive letter was not available.

However, in a future version, I plan to add path "mappings" where absolute hash files can later be transparently remapped to new locations. It's one of those features that would probably be rarely used, but occasionally extremely handy; at least, it would be useful to those not familiar with regex search and replace in their text editors!
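
For those who would rather script it than reach for regex search and replace, remapping an absolute hash file by hand might look like the Python sketch below. It assumes md5sum-style lines of the form `<hash> *<absolute path>`; that layout and the name `remap_line` are assumptions for illustration, not the planned "mappings" feature.

```python
def remap_line(line, old_prefix, new_prefix):
    """Swap a leading path prefix in one hash line; any other line passes through unchanged."""
    # Assumed layout: "<hash> *<path>". Lines without " *" are left alone.
    digest, sep, path = line.partition(" *")
    # Windows paths are case-insensitive, so compare the prefix without regard to case.
    if sep and path.lower().startswith(old_prefix.lower()):
        return digest + sep + new_prefix + path[len(old_prefix):]
    return line
```

For example, `remap_line(line, "D:\\", "F:\\")` applied to every line of a hash file would point it at the same folders on drive F: instead of drive D:.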

Anyway, that's for the future; what you want is doable right now, with a simple root hash.

;o)

Hey cor,

thanks for your reply.

Tasmania, Australia was originally named Van Diemens Land.

Hashing is a great way to track file changes but is there any way to safeguard against the hash files getting corrupted?

cheers

Jeff

Tasmania! What a beautiful word that is.

Guard against corrupt hash files? There are a few ways. You could start by making a backup of the hash files. It's fairly easy to create an archive of only the hash files, even from a volume of many individual hash files. When decompressed in-situ, all the hash files would drop back into the correct locations. Also, hash the archive!

Then there's absolute (aka 'remote') hashing, which I discussed in my previous post. You could keep the hash files somewhere else, and still hash the original files as if they were right next to the hash files. The hash file itself could live on some solid-state, read-only volume.

For the extremely cautious, after hashing the volume as normal, you could temporarily remove .hash (or .md5/.sha1 if you use those extensions) from checksum's ignore list, and do a root hash of *only* the hash files, using absolute paths, and store that hash file somewhere safe. Before checking the file hashes, you would check the hash hashes!

And don't forget double-hashing! You can have a sha1 AND md5 hash for each file, inside the same hash file, if required. It would be extremely unlikely, if corruption is the potential issue, for both hashes to become corrupted.
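
As an illustration of the double-hashing idea, both digests can be computed in a single read of the file. This Python sketch only shows the principle; `double_hash` is a hypothetical name and says nothing about how checksum does it internally.

```python
import hashlib

def double_hash(path, chunk_size=1 << 20):
    """Return (md5_hex, sha1_hex) for a file, reading the file only once."""
    md5, sha1 = hashlib.md5(), hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha1.update(chunk)
    return md5.hexdigest(), sha1.hexdigest()
```

If a stored MD5 entry no longer matches but the stored SHA1 entry does, the damage is most likely in the hash file rather than in the data file, which is what makes the second hash useful as a cross-check.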