checksum tricks and tips

hints, secrets, behaviours, assumptions and more..

Get the most from your hashing!

checksum represents a whole new way of working with hashes. This page aims to help you get the most out of the experience, wherever you're at..


- Absolute beginners

- Basic checksum tasks

- Launch modifiers

- Batch Processing

- Automatic Music playlists

- Custom Explorer commands

- Creating "quiet" checksums

- Cross-platform hashing

- In your SendTo menu

- Compare two Folders or Disks

- Compare two CDs

- Compare a disk with the original .iso

- Installer Watch (& upgrade helper)

- Command-line switches

- Preferences

- How do I..

Absolute beginners..

The basics: checksum creates "hash files". A hash file is a simple, plain text file containing one or more file hashes, aka. "checksums". Hashes are small strings which uniquely represent the data that was hashed. e.g..

cf88430390b98416d1fb415baa494dce *08. Allow Your Mind To Wander.mp3

(Mike Mainieri - Journey Thru An Electric Tube [1968] - I have the vinyl)

If you want to know more about the algorithms that checksum uses to hash files (MD5, SHA1 and BLAKE2), see here.

Once these hashes have been created for a particular file or folder (or disk), you have a snapshot that can be used, at any time in the future, to verify that not one bit of data has changed. And I do mean a "bit"; even the slightest change in the data will, thanks to the avalanche effect, produce a wildly different hash, which is what makes these algorithms so good for data verification.
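To see the avalanche effect for yourself, here is a minimal Python sketch (Python's standard `hashlib`, not checksum itself) that hashes two inputs differing by a single bit and counts how many bits of the MD5 digest change. The sample text and the helper names are only illustrative.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Return the MD5 digest of data as a lowercase hex string."""
    return hashlib.md5(data).hexdigest()


def differing_bits(hex_a: str, hex_b: str) -> int:
    """Count how many bits differ between two equal-length hex digests."""
    return bin(int(hex_a, 16) ^ int(hex_b, 16)).count("1")


original = b"Allow Your Mind To Wander"
# Flip only the lowest bit of the first byte ('A' 0x41 becomes '@' 0x40).
flipped = bytes([original[0] ^ 0x01]) + original[1:]

hash_a = md5_hex(original)
hash_b = md5_hex(flipped)

print(hash_a)
print(hash_b)
print(differing_bits(hash_a, hash_b), "of 128 bits differ")
```

With a well-behaved hash you would expect roughly half of the 128 bits to differ, even though only one input bit changed.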

The basic checksum tasks..

Most people will simply install checksum, and then use the Explorer context (right-click) menu to create and verify checksums, rarely needing any of the "extra" functionality that lurks beneath checksum's simple exterior. After all, checksum is designed to save you time, as well as aid peace of mind. This is how I mostly use it, too..

Create checksums..

Right-click a file, the checksum option produces a hash file (aka. 'checksum file') with the same name as the file you clicked, except with a .hash extension (or .md5/.sha1, if you use those, instead). So a checksum of some-movie.avi would be created, named some-movie.hash (if you don't use the unified .hash extension, your file would instead be named some-movie.md5 or some-movie.sha1, depending on the algorithm used).

Right-click a folder, the Create checksums.. option will produce a hash file in that folder, containing checksums for all the files in the folder (and so on, inside any interior folders), named after the folder(s), again, with a .hash extension, e.g. somefolder.hash
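As a rough illustration of what folder hashing involves, the following Python sketch walks a folder tree and writes one `<foldername>.hash` file per folder, using the `hash *filename` line format shown earlier. It is an assumption-laden approximation, not checksum's own code: it always uses MD5, reads each file whole, and the function names are made up for this example.

```python
import hashlib
import os
import tempfile


def md5_of(path):
    """Return the MD5 hex digest of a file (read whole; fine for a sketch)."""
    with open(path, "rb") as handle:
        return hashlib.md5(handle.read()).hexdigest()


def create_folder_hashes(top):
    """Write one <foldername>.hash per folder under top; return the paths written."""
    written = []
    for root, dirs, files in os.walk(top):
        dirs.sort()  # visit sub-folders in a predictable order
        names = sorted(n for n in files if not n.lower().endswith(".hash"))
        if not names:
            continue  # nothing to hash in this folder
        lines = ["{} *{}".format(md5_of(os.path.join(root, n)), n) for n in names]
        folder_name = os.path.basename(os.path.abspath(root))
        target = os.path.join(root, folder_name + ".hash")
        with open(target, "w", encoding="utf-8") as handle:
            handle.write("\n".join(lines) + "\n")
        written.append(target)
    return written


# Usage: hash a throwaway folder tree and show the resulting hash files.
with tempfile.TemporaryDirectory() as top:
    os.mkdir(os.path.join(top, "interior"))
    with open(os.path.join(top, "a.txt"), "wb") as handle:
        handle.write(b"hello")
    with open(os.path.join(top, "interior", "b.txt"), "wb") as handle:
        handle.write(b"world")
    for hash_path in create_folder_hashes(top):
        with open(hash_path, encoding="utf-8") as handle:
            print(os.path.basename(hash_path), "->", handle.read().strip())
```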

Verify checksums..

Click (left-click) a hash file (or right-click and choose Verify this checksum file..), checksum immediately verifies all the hashes (.hash/.md5/.sha1) contained within.

Right-click a folder, the Verify checksums.. option instructs checksum to scan the directory and immediately verify any hash files contained within.
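Verification is the reverse process: read each line of the hash file, re-hash the named file, and compare. The Python sketch below shows the idea under some stated assumptions: MD5 only, `hash *name` lines with names relative to the hash file's folder, and lines starting with `#` treated as comments. The status words OK, CHANGED and MISSING are used here simply as labels; the function names are hypothetical.

```python
import hashlib
import os


def parse_hash_line(line):
    """Split 'hexdigest *name' into (digest, name); None for blanks and comments."""
    line = line.rstrip("\r\n")
    if not line.strip() or line.startswith("#"):
        return None
    digest, _, rest = line.partition(" ")
    # Drop the single marker character: '*' (binary) or ' ' (text mode).
    name = rest[1:] if rest[:1] in ("*", " ") else rest
    return digest.lower(), name


def verify_lines(lines, base_dir):
    """Return {name: 'OK' | 'CHANGED' | 'MISSING'} for every entry in lines."""
    results = {}
    for line in lines:
        entry = parse_hash_line(line)
        if entry is None:
            continue
        expected, name = entry
        path = os.path.join(base_dir, name)
        if not os.path.isfile(path):
            results[name] = "MISSING"
            continue
        with open(path, "rb") as handle:
            actual = hashlib.md5(handle.read()).hexdigest()
        results[name] = "OK" if actual == expected else "CHANGED"
    return results


print(parse_hash_line(
    "cf88430390b98416d1fb415baa494dce *08. Allow Your Mind To Wander.mp3"
))
```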

That's about it, and this simple usage is fine for most situations. But occasionally we need more..

checksum launch modifiers..

When you launch checksum, you can modify its default behaviour in two important ways.

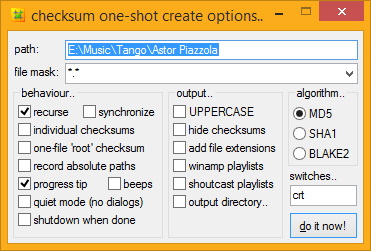

The first modifier is the <SHIFT> key. Hold it down when checksum launches, and you pop-up the one-shot options dialog, which enables you to change lots of other things about what checksum does next. This works with both create and verify tasks, from explorer menus or drag-and-drop. Here's what the one-shot create dialog looks like..

In there, as you can see, you can set all sorts of things. Hover over any control to get a Help ToolTip (you might need to repeat that to read the entire tip!). You can also drag files and folders directly onto that dialog, if you want to alter the path setting without typing. Same for the verify options.

The file mask: input is, by default, *.*, which means "All files", "*" being a wildcard, which matches any number of any characters. You can have multiple types, too, separated by commas. For example, if you wanted to hash only PNG files, you would use *.png; if you wanted to hash only text files beginning with "2008", you could use 2008*.txt, and so on.
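If you want to experiment with how such masks behave, Python's standard `fnmatch` module offers similar wildcard matching. This is only a sketch of the idea, not checksum's matching code: it assumes matching is case-insensitive and that `*.*` means "all files" even for names without a dot (plain `fnmatch` would not match those). Note that `fnmatch` also treats `[...]` as a character class, which may differ from checksum.

```python
from fnmatch import fnmatch


def matches_masks(filename, masks="*.*"):
    """True if filename matches any comma-separated mask, ignoring case."""
    name = filename.lower()
    for mask in masks.split(","):
        mask = mask.strip().lower()
        if mask == "*.*":
            return True  # treated as "all files", dot or no dot
        if mask and fnmatch(name, mask):
            return True
    return False


print(matches_masks("photo.PNG", "*.png"))
print(matches_masks("2008-notes.txt", "2008*.txt"))
print(matches_masks("2009-notes.txt", "2008*.txt"))
```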

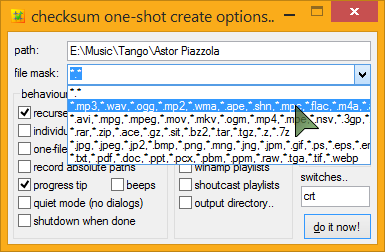

If you click the drop-down button to the right of the input, you can access your pre-defined file groups, ready-for-use (you can easily add to/edit these in your checksum.ini)..

NOTE: Normally one drops folders into checksum's create options (path input). If you drop a file onto the create options, the path is inserted into the path: input, and the file name is added to the mask input - it is also inserted into the drop-down list in case you need to get back to it.

If you drop multiple files into the create options, checksum will create a custom file mask from your selection. For example, if you dropped these three files; "hasher.jpg", ".txt" and "security.pdf", checksum would create the mask: "*.jpg,*.txt,*.pdf".
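The mask-building step described above is easy to picture in code. Here is a small Python sketch (the function name is made up, and this is not checksum's implementation) that turns a list of dropped file names into a comma-separated mask, keeping first-seen order and skipping duplicates.

```python
def mask_from_files(filenames):
    """Build a comma-separated mask from the extensions of the given names."""
    masks = []
    for name in filenames:
        _stem, dot, extension = name.rpartition(".")
        if not dot or not extension:
            continue  # no extension to build a mask from
        mask = "*." + extension.lower()
        if mask not in masks:  # keep first-seen order, no duplicates
            masks.append(mask)
    return ",".join(masks)


print(mask_from_files(["hasher.jpg", ".txt", "security.pdf"]))
# prints: *.jpg,*.txt,*.pdf
```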

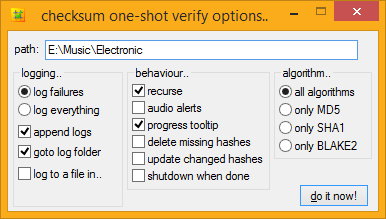

Here is what the one-shot verify options dialog looks like..

The second modifier is the <Ctrl> key. Hold it down when checksum launches and you force checksum into verify mode, that is to say, no matter what type of file it was, you instruct checksum to treat it as a hash file, and verify it. This works with drag-and-drop too, onto checksum itself, or a shortcut to checksum. checksum's default drag-and-drop action is to create hashes.

Amongst other things, this is useful for verifying folders in portable mode, simply Ctrl+drag-and-drop the folder directly onto checksum (or a shortcut to it), and all its hashes will be immediately verified.

batch processing..

hashDROP


A batch-processing front-end for checksum..

Because checksum can be controlled by command-line switches, it's possible to create all sorts of interesting front-ends for it. The first of these to come to my attention, is a neat wee application called "hashDROP", which enables you to run big batches of jobs through checksum, using a single set of customizable command-line switches.

As developer seVen explains on the hashDROP page..

Actually, that looks to be down right now. I've put a temporary copy of the page and download here.

On seVen's desktop, at least, it looks something like this..

hashDROP has already earned a place in my SendTo menu. Good work, seVen! For more information, documentation, and downloads, visit the (temporary) hashDROP page.

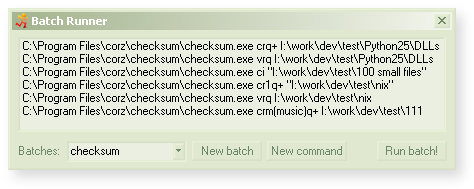

Batch Runner


Run multiple programs in a batch..

I originally designed Batch Runner to run a big batch of tests on checksum before release, but it has since proven useful for other tasks, so I spruced it up a bit and made it available.

If you want to run loads of hashing jobs using the same switches, hashDROP is probably more useful to you. But if you want to run lots of checksum jobs with different switches, or as part of a larger batch of jobs involving other programs, then check out Batch Runner.

Batches can be saved, selected from a drop-down, run from the command-line, even from inside other batches, so it's handy for repetitive scheduled tasks, or application test suites, as well as general batch duties. At least on my desktop, it looks like this..

Automatic Music playlists..

Perhaps checksum's second most common extra usage is making music playlists. After you have ripped an album, you will most likely want a playlist along with your checksums, so why not do both at once? checksum can.

Right-click a folder and SHIFT-Select the checksum option (which pops up the one-shot options dialog), check either the Winamp playlists (.m3u/.m3u8) or shoutcast playlists (.pls) option, and then do it now! You're done.

By default, checksum will also recurse (dig) into other folders inside the root (top) folder. Now you've got music playlists that you can click to play the whole album in your media player.

Note that checksum will thoughtfully switch your file masks to your current music group when you select a playlists option, reckoning that you'll probably only want to actually hash the music files, not associated images, info files and such, but it's easy enough to switch it back to *.* (hash all files) if you need that. The rationale behind this being that it's what most people want, so the majority get the simpler, two-click task.
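A basic .m3u playlist is just a plain text file listing one track per line, which is why it is so cheap to create alongside the hashes. The Python sketch below writes such a playlist for one folder. The set of music extensions is a hypothetical stand-in for your "music" group, the function name is invented, and the file is written as UTF-8 (strictly speaking the .m3u8 convention).

```python
import os

# Hypothetical stand-in for a "music" file group.
MUSIC_EXTENSIONS = {".mp3", ".flac", ".ogg", ".wav"}


def write_m3u(folder):
    """Write <foldername>.m3u listing the music files directly inside folder.

    Returns the playlist path, or None if the folder holds no music files.
    """
    tracks = sorted(
        name for name in os.listdir(folder)
        if os.path.splitext(name)[1].lower() in MUSIC_EXTENSIONS
    )
    if not tracks:
        return None
    folder_name = os.path.basename(os.path.abspath(folder))
    playlist = os.path.join(folder, folder_name + ".m3u")
    with open(playlist, "w", encoding="utf-8") as handle:
        handle.write("\n".join(tracks) + "\n")
    return playlist
```

Because the entries are relative file names, the playlist keeps working if the whole album folder is moved.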

If you do this sort of thing a lot, check out the next section, for how to put this functionality directly into your Explorer context menu, and skip the dialog altogether..

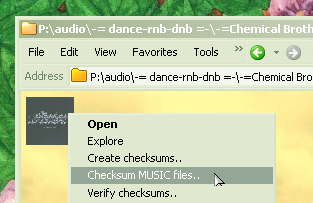

Custom Explorer Context Menu Commands made easy..

On the subject of music files, you may encounter a lot of these, and fancy creating a custom explorer right-click command along the lines of "checksum all music files", or something like that. No problem; you can simply create a new command in the registry, add the "m" switch, and add your file masks, right?

But what if you change your file masks? Perhaps add a new music file type? Do you have to go and change your registry again? NO! checksum has it covered. Instead of specifying individual file masks, use your group name in the command, e.g. m(music) and checksum applies all the file masks from that group automatically, so your context command is always up-to-date with your latest preferences.

Here's an example registry command that would do exactly that. Copy and paste into an empty plain text file, save as something.reg, and merge it into your registry. If you installed checksum in a different location, edit the path to checksum before you merge it into the registry (not forgetting to escape all path backslashes - in other words, double them)..

Windows Registry Editor Version 5.00

[HKEY_CLASSES_ROOT\Directory\shell\01b.checksum music]

@="Checksum &MUSIC files.."

[HKEY_CLASSES_ROOT\Directory\shell\01b.checksum music\command]

@="\"C:\\Program Files\\corz\\checksum\\checksum.exe\" crm(music) \"%1\""

NOTE! If you add a "3" to the switches [i.e. make them c3rm(music)] you'll get media player album playlist files created automatically along with the checksum files. Groovy! Here's one I prepared earlier.
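The escaping is the fiddly part of writing .reg files by hand: every backslash in a quoted value is doubled, and every embedded double quote gets a backslash in front. If you would rather generate the text than type it, this Python sketch does the escaping for you. The function names are invented for the example; the key name, label, path and switches passed in at the end are the ones from the registry command above.

```python
def reg_escape(value):
    """Escape backslashes and double quotes for a quoted .reg string value."""
    return value.replace("\\", "\\\\").replace('"', '\\"')


def context_menu_reg(key_name, label, exe_path, switches):
    """Return .reg text that adds a folder right-click command."""
    command = '"{}" {} "%1"'.format(exe_path, switches)
    base = "HKEY_CLASSES_ROOT\\Directory\\shell\\" + key_name
    return "\n".join([
        "Windows Registry Editor Version 5.00",
        "",
        "[" + base + "]",
        '@="' + reg_escape(label) + '"',
        "",
        "[" + base + "\\command]",
        '@="' + reg_escape(command) + '"',
        "",
    ])


print(context_menu_reg(
    "01b.checksum music",
    "Checksum &MUSIC files..",
    r"C:\Program Files\corz\checksum\checksum.exe",
    "crm(music)",
))
```

Save the printed text as something.reg and merge it as described above; change the path argument if checksum lives somewhere else on your system.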

Setting new default Explorer context actions..

You can also change checksum's default Explorer commands, as well as add new commands, without going anywhere near the registry. Simply edit the installer's setup.ini file, [keys] section. For example, to always bring up the one-shot options dialog when creating checksums on folders and drives, you would add an "o" to those two commands..

HKCR\Directory\shell\01.|name|\command="|InstalledApp|" cor "%1"

HKCR\Drive\shell\01.|name|\command="|InstalledApp|" cor "%1"

Then run checksum's installer (setup.exe), and install/reinstall checksum with the new options. From then on, any time you select the "Create checksums.." Explorer context menu item, you will get the one-shot options dialog. If you would prefer to synchronize hashes under all circumstances, add a y, and so on.

Creating checksums "quietly"..

If you want to script or schedule your hashing tasks, you will probably want checksum to run without any dialogs, notifications and so forth. If so, add the Quiet switch.. q

When the q option is used alone, if checksum encounters existing hash files, it continues as if you had clicked "No to All", in the "checksum file exists!" dialog, so no existing files are altered in any way. This is the safest default.

If you would prefer checksum to act as if you had clicked "Yes to all", instead, use q+, and any existing checksums will be overwritten.

If you want synchronization, add a y switch (it can be anywhere in the switches, so long as it's in there somewhere, but qy is just fine)

Quiet operation also works for verification, failures are logged, as usual. Like most of checksum's command-line switches, these behaviours can be set permanently, in your checksum.ini.
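For scripted or scheduled jobs, the quiet switches slot neatly into whatever launches checksum. As one possible approach, here is a Python sketch that builds the argument list for a quiet run from the q, q+ and y switches described above. The install path is the one used in this page's examples, and the helper names are hypothetical. Actually running it requires Windows with checksum installed, and the meaning of the exit code is not covered here.

```python
import subprocess

# Install path as used in the examples on this page; adjust to suit.
CHECKSUM_EXE = r"C:\Program Files\corz\checksum\checksum.exe"


def quiet_command(target, create=True, overwrite=False, synchronize=False):
    """Build an argument list for a dialog-free checksum run."""
    switches = "c" if create else "v"
    switches += "q+" if overwrite else "q"  # q+ acts like "Yes to all"
    if synchronize:
        switches += "y"  # add hashes for new files to existing hash files
    return [CHECKSUM_EXE, switches, target]


def run_quietly(target, **options):
    """Launch checksum and return its exit code."""
    return subprocess.run(quiet_command(target, **options)).returncode


print(quiet_command(r"D:\MyDir", synchronize=True))
```

Passing the arguments as a list lets `subprocess` take care of quoting paths that contain spaces.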

Working with Cross-Platform hashes..

checksum has a number of features designed to make your cross-platform, inter-networking life a bit easier.

You don't have to do anything special to verify hash files created on Linux, UNIX, Solaris, Mac, or indeed any other major computing platform; checksum can handle these out-of-the-box.

If you need to create hash files for use on another platform, perhaps with some particular system file verification tool, checksum has a few preferences which might help..

You will perhaps appreciate checksum's plain text ini file (checksum.ini) containing all the permanent preferences. Inside there you can set not only which Line Feeds checksum uses in its files (Windows, UNIX, or Mac), but also enable UTF-8 files, single-file "root" hashing, generic hash file naming, UNIX file paths, and more. Lob checksum.ini into your favourite text editor and have a scroll.

checksum in your SendTo menu..

There are a number of ways to run checksum. One handy way, especially if you are running checksum in portable mode without Explorer menus, is to keep a shortcut to checksum in the SendTo menu.

Simply put; any regular file or folder sent to checksum will be immediately hashed. Send a checksum file (.hash, .md5, .sha1, plus whatever UNIX hash files you have set), and it will be immediately verified. If you want extra options, hold down the <SHIFT> key, as usual.

If you want to send a non-checksum file, but have checksum treat it as a checksum file, hold down the <Ctrl> key during launch, to force checksum into verify mode (either just after you activate the SendTo item, or perhaps easier; hold down <SHIFT> AND <Ctrl> together while you click, to bring up the one-shot verify options). This is also handy for verifying folder hashes.

How to accurately compare two folders or disks.

This is an easy one. First, create a "root" hash in the root of the first (source) folder/disk, then copy the .hash file over to the second (target) folder and click it.

That's it!

For situations where you don't need a permanent record of the hashes, you can fully automate the process of comparing two folders with simple checksum.
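The logic behind a record-free comparison is simple enough to sketch: hash every file in both trees, then compare the two sets of results by relative path. The Python below is only an illustration of that idea (MD5, whole-file reads, made-up function names), not how checksum or simple checksum do it.

```python
import hashlib
import os


def tree_hashes(top):
    """Map each file's relative path ('/' separators) to its MD5 hex digest."""
    hashes = {}
    for root, _dirs, files in os.walk(top):
        for name in files:
            full = os.path.join(root, name)
            relative = os.path.relpath(full, top).replace(os.sep, "/")
            with open(full, "rb") as handle:
                hashes[relative] = hashlib.md5(handle.read()).hexdigest()
    return hashes


def compare_trees(source, target):
    """Return (changed, missing, extra) lists of relative paths, each sorted."""
    src, dst = tree_hashes(source), tree_hashes(target)
    changed = sorted(p for p in src if p in dst and src[p] != dst[p])
    missing = sorted(p for p in src if p not in dst)  # in source only
    extra = sorted(p for p in dst if p not in src)    # in target only
    return changed, missing, extra
```

Three empty lists mean the target is a faithful copy of the source, at least as far as file contents and relative paths go.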

How to accurately compare two CDs, DVDs, etc.

(even when they don't have hash files on them)..

When hashing read-only media, obviously we can't store the hash files on the disk itself. However, thanks to checksum's range of intelligent read-only fall-back strategies, you can make light work of comparing read-only disks with super-accurate MD5 or SHA1 hashes, even if those disks were burned without hashes.

All we need to do, is ask checksum to create a "root" hash file of the original disk, using the "Absolute paths" option. This will produce a hash file containing hashes for the entire disk, with full, absolute paths, e.g..

531a3ce6b631bb0048508d872fb1d72f *D:\Sweet.rar

558e40b6996e8a35db668011394cb390 *D:\Backups\Efficient.cab

832e98561d3fe5464b45ce67d4007c11 *D:\Sales Reports\April.zip

There are a few ways to achieve this. For one-off jobs, you can simply add k1 to your usual command-line switches. For example, to create a recursive root hash file of a disk, with absolute paths, you would use crk1.

Another way, is to set (and forget) checksum's fallback_level preference to 2, inside checksum.ini..

fallback_level=2

With fallback_level=2, when checksum is asked to create hashes of a read-only volume, it will fall-back to creating a single "root" hash with absolute paths, inside your fall-back location (also configurable), which is exactly what we need!

Then in the future, to verify the original disk, or copies of the disk; you simply insert it, and click the hash file.

You can store the .hash file anywhere you like; so long as the disk is always at D:\, or whatever drive letter you used to create the .hash file originally, it will continue to function perfectly.

If you want to know more about checksum's read-only fall-back strategies, see here.

Or accurately compare a burned disk to its original .iso hashes..

If you have a .hash file of the original .iso file, in theory, a future rip of the disk to ISO format, should produce an .iso file with the exact same checksum as the original. My burner is getting old, but I needed to know, and so tested the theory.

I Torrented an .iso file of a DVD (Linux Distro) - the hashes were published onsite, checksum verified these were correct. I burned the disk to a blank DVD-R, and then deleted the original .iso file. Everything is now on the disk only. The .hash file is still on my desktop.

Then I used the ever-wonderful ImgBurn, to read the DVD to a temporary .iso file on my desktop.

Fortunately, the .iso file, and the original .iso file had the same name, so I didn't need to edit the .hash file in any way. Then the moment of truth. I click the .hash file, and checksum spins into action, verifying. A few seconds later... Beep-Beep! No Errors! It's a perfect match!

I can't speak for other software, but with ImgBurn at least, a burned disk can produce an .iso file with a hash bit-perfectly identical to that of the original .iso file used to create the disk, and can be relied upon for data verification. Good to know.
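If you want to repeat this kind of test without checksum to hand, the same check can be sketched in a few lines of Python. Disk images are large, so the file is read in chunks rather than all at once. The function names and the default of SHA1 are choices made for this example only.

```python
import hashlib


def hash_large_file(path, algorithm="sha1", chunk_size=1024 * 1024):
    """Hash a file in fixed-size chunks so memory use stays small."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        while True:
            chunk = handle.read(chunk_size)
            if not chunk:
                break  # end of file
            digest.update(chunk)
    return digest.hexdigest()


def same_image(original_hash, ripped_iso_path, algorithm="sha1"):
    """True if the ripped image hashes to the originally published value."""
    actual = hash_large_file(ripped_iso_path, algorithm)
    return actual == original_hash.strip().lower()
```

Feeding the data in chunks gives exactly the same digest as hashing the whole file in one go; only the memory footprint changes.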

checksum as an installer watcher..

Because checksum can so accurately inform you of changes in files, it can function as an excellent ad-hoc installer watcher. All you do is create a root checksum for the area you would like to watch. Run the installer. And afterwards, verify the checksum. If anything has changed, checksum will let you know about it, with the option to log the list to funky XHTML or plain text.

checksum can be utilized in any situation where you need to know about changed files. You can even use it to compare registry files, one exported before, the other after. If the hashes match, there's no need to investigate further.

checksum as an upgrade helper..

I have more than once used checksum to help me with upgrades. Imagine the scenario.. Your local zwamp installation keeps bugging you to update. But you put it off because you modified a few files but don't remember exactly which files. No need! Let checksum take care of it..

Hash the installation folder, using a single-file "root" hash.

Copy the .hash file to the root (same folder) of a fresh copy of zwamp and click it.

Voila! A list of modified files. Now upgrading is easy.

checksum's custom command-line switches..

Click & Go! is the usual way to operate checksum; but checksum also contains a lot of special functionality, accessed by the use of "switches"; meaningful letter combinations which instruct checksum to alter its operation in some way.

If you have some unusual task to accomplish, the one-shot options dialog enables you to manipulate the most common switches with simple checkbox controls. You can see the current switches in a readout, updating dynamically as you check and uncheck each option. But this output is also an input, where you can manipulate the switches directly, if you wish. If that is the case, you will probably find the following reference useful.

You may also find this section useful if you are constructing a full checksum command-line for some reason, maybe a Batch Runner command or batch script, or custom front-end for checksum, or altering your explorer context menu, or creating a scheduled task, or uTorrent finished command*, or some Übertask for your Windows Run command (Win+R) or something else. In each case, switches are placed before the file/folder path, for example; the full command-line to verify a checksum file might look like this..

"C:\Program Files\corz\checksum\checksum.exe" v "C:\path\to\file.hash"

Here are all the currently available switches:

- c: Create checksums.

- v: Verify checksums.

- r: Recurse (only for directories).

- y: Synchronize (add any new file hashes to existing checksum files).

- i: During creation: create individual hash files (one per file).

  During verification: performs hash search for individual file (see examples, below).

- s: Create SHA1 checksums (default is to create MD5 checksums).

- 2: Create BLAKE2 checksums (default is to create MD5 checksums).

- u: UPPERCASE hashes (default is lowercase).

- m: During Creation: File masks. Put these in brackets after the m. e.g.. m(*.avi,*.rm)

  Note: You can use your file groups here, e.g. m(music)

  During verification: Check Only Modified Files.

  Perform operations only on files with a modified timestamp more recent than their recorded timestamp. This is generally used along with the "w" switch, to update the hash and timestamp of a file or set of files you have mindfully changed, whilst skipping bit-checking all other files, potentially saving a lot of disk access and massive amounts of time, especially over network links.

  Note: This feature is currently marked as "experimental".

- j: Custom hash name (think: "John"!). Put this in brackets after the j. e.g.. j(my-hashes)

- d: During Creation: Output Directory. Put this in brackets after the d. e.g.. d(C:\hashes)

  NOTE: Make this the last bracketed switch on your command-line, i.e. m(..)j(..)d(..).

  During verification: Override Root Directory. *ßeta Only

  Using the d switch, you can specify a new root directory for relative hash files, enabling them to be checked outside their original location.

  For example, if you created a relative .hash file for files in "D:\my files" and put the hashes in a folder, "e:\my hashes" using a command-line something like..

  crd("e:\my hashes") "D:\my files"

  You can now verify this .hash file ("e:\my hashes\my files.hash") IN-PLACE using similar syntax:

  vrd("D:\my files") "e:\my hashes"

- e: Add file extensions to checksum file name (for individual file hashes)..

- 1: Create one-file "root" checksums, like Linux CD's often have.

- 3: Create .m3u/.m3u8 playlists for all music files encountered (only for folder hashing)..

- p: Create .pls playlists for all music files encountered (only for folder hashing)..

- q: Quiet operation, no dialogs (for scripting checksum - see help for other options)..

- h: Hide checksum files (equivalent to 'attrib +h').

- o: One-shot Options. Brings up a dialog where you can select extra options for a job.

  (to pop up the options at run-time, hold down the <SHIFT> key at launch)

- b: Beeps. Enable audio alerts (PC speaker beeps or WAV files).

- t: ToolTip. Enable the progress ToolTip windoid.

- n: No Pause. Normally checksum pauses on completion so you can see the status. This disables it.

  (note: you can also set the length of the pause, in your prefs)

- k: Absolute Paths. Record the absolute path inside the (root) checksum file.

  Use this only if you are ABSOLUTELY sure the drive letter isn't going to change in the future..

- f: Log to a file

  (if there are failures, checksum always gives you the option to log them to a file)

- g: Go to errors.

  If a log was created; e.g. there were errors; open the log folder on task completion.

- l: Log everything.

  (the default is to only log failures, if any).

- w: Update changed hashes. (think: reWrite)

  (during verification, hashes and timestamps for "CHANGED" files can be updated in your .hash file).

  USE WITH CAUTION ON VOLUMES YOU KNOW TO BE GOOD!

- x: During creation: used to specify ignored directories, using standard file masks.

  e.g. checksum.exe cr1x(foo*,*bar,baz*qux) "D:\MyDir"

  During verification: delete missing hashes.

  (hashes for "MISSING" files are removed from your .hash file).

- a: Only verify these checksum files.

  (followed by algorithm letter: am for MD5, as for SHA1, a2 for BLAKE2 - see example below).

- z: Shutdown when done.

  Handy for long operations on desktop systems. A dialog will appear for 60 seconds, enabling you to abort the process, if required.

The 'a', 'f', 'g', 'l' and 'w' switches take effect when verifying hashes.

The '1', '2', '3', 'e', 'h', 'j', 'k', 'm', 'p', 's', 'u', and 'y' switches take effect when creating hashes.

The 'd', 'i' and 'x' switches have different functions for creation and verification.

In other words..

global switches = b, n, o, q, r, t, z.

creation switches = 1, 2, 3, c, d, e, h, i, j, k, m, p, s, u, x, y.

verify switches = a(m/s/2), d, f, g, i, l, v, w, x.

Switches can be combined, like this..

… checksum.exe v "C:\my long path\to\files.md5"

… checksum.exe crim(movies) c:\downloads

… checksum.exe vas c:\archives

… checksum.exe c3rm(music) p:\audio

… checksum.exe cr1m(*.zip) d:\

… checksum.exe vi "d:\path\to\some\video file.avi"

[ search for a hash of "d:\path\to\some\video file.avi" and verify that one file ]

… checksum.exe crkq1m(movies)j(video-hashes)d(@desktop) v:\

[ create a root hash file ("video-hashes.hash") for all movie files on drive V: and place it on the desktop ]

note: Although it won't appear in the options dialog, the custom name, "video-hashes", will still be set when you begin the job.

"C:\Program Files\corz\checksum\checksum.exe" cq "%D\%F"

notes:

- The order of the switches isn't important, though the "m" switch must always be immediately followed by the file masks (in brackets), e.g. m(*.avi) (same with d(dir), j(name) and x(file*mask) switches), and the "a" switch must be the first letter of a two letter combination, e.g. am

- The d() (output directory/root directory) switch must be the last (bracketed) switch on your command-line. If you are using custom hash file name and/or custom file groups, put those first, e.g..

  "c:\path to\checksum.exe" cr1qnm(movies)j(my-hashes)d("c:\some (dir) here") "D:\My Movies"

  ..which would quietly create a root hash file for all the movie files in D:\My Movies, and put those hashes in "c:\some (dir) here\my-hashes.hash". Those tricky braces inside the example path are why it goes last.

- You don't need to specify the m(music) group switch to create playlists, only the 3. A command like checksum.exe rc3 "P:\audio" would create checksums recursively for all files in the path p:\audio, whilst creating playlists for only the music file types. Nifty, huh?

- Most of these switches also have a preference inside checksum.ini. If that preference is enabled, you can disable it temporarily by prepending the switch with a - (minus) character, e.g. to disable the Progress ToolTip, use -t

- Any of these switches can be easily added permanently to your Explorer right-click (context) menus. For instance, you may like to always use the one-shot options, without having to hold the SHIFT key every time. So simply add an o, and it will be so. See here for details of how to permanently alter checksum's Explorer context menu commands.
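To make the bracket rules in these notes concrete, here is a toy switch parser in Python. It is purely illustrative and certainly not checksum's real parsing code: it assumes m(), j() and x() arguments end at the first closing bracket, while d() runs to the last closing bracket in the string, which is one way to see why d() has to go last when its path contains brackets of its own.

```python
def parse_switches(text):
    """Split a switch string into {switch: True, False, or an argument string}."""
    parsed = {}
    i = 0
    while i < len(text):
        enabled = True
        if text[i] == "-":  # "-t" temporarily disables a preference
            enabled = False
            i += 1
            if i >= len(text):
                raise ValueError("'-' must be followed by a switch")
        letter = text[i]
        i += 1
        if letter == "a" and enabled:
            # "a" is the first letter of a two-letter combination: am, as, a2
            if text[i:i + 1] not in ("m", "s", "2"):
                raise ValueError("'a' must be followed by m, s or 2")
            parsed["a"] = text[i]
            i += 1
        elif letter in "mjxd" and text[i:i + 1] == "(":
            # d(...) may contain brackets itself, so it runs to the LAST ')';
            # the other bracketed switches stop at the FIRST ')'.
            end = text.rfind(")") if letter == "d" else text.find(")", i)
            if end < i:
                raise ValueError("missing ')' after " + letter + "(")
            parsed[letter] = text[i + 1:end].strip('"')
            i = end + 1
        elif letter == "q" and text[i:i + 1] == "+":
            parsed["q"] = "+"  # quiet, overwriting existing checksums
            i += 1
        else:
            parsed[letter] = enabled
    return parsed


print(parse_switches(r'cr1qnm(movies)j(my-hashes)d("c:\some (dir) here")'))
```

Try moving the d(...) part in front of m(movies) and the parser swallows everything up to the final bracket as the directory, which is exactly the trap the ordering rule avoids.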

And remember, if there's some specific behaviour that you want set permanently, you can do that, and a lot more, inside checksum.ini..

checksum.ini

working with checksum's UNIX-style preference file..

checksum has a lot of available options. Here is a page that will help you get the most out of them.

I do requests!

If there's something you would like to accomplish with checksum, but don't think checksum can; feel free to request a feature, below..

Request a feature!

If you think you have found a bug, click here. If you want to suggest a feature, leave a comment below. For anything else, click here.

Hi there again. I have a request for your next version. Allow checksums to be verified against another directory. Scenario: I copied a large amount of files from a network share, I've hashed my local files, now I want to compare the hashes to the network share to verify my download. Currently a user has to copy the HASH file to the network location, or hash the network files and copy the HASH file to the local directory. Keep up the great work!

This is a Beautiful Site.

Hi again, wraithdu. I'm looking at your post wondering what might be easier than simply copying the hash file over to the new location. It's a single-click and drag operation! If you can think of something easier, let me know, and I'll definitely consider it.

Cheers, Tuwase. People keep telling me this, but no one ever says, "Gee, Cor, your site design is so pleasing to my eyes, I'd like to donate you a hundred bucks!", sadly.

I think I'll put up a "comment thing" just for general stuff. Maybe right on the front page. That would be novel. Hmm..

;o)

I would like to congratulate you on a very fine tool. Unfortunately for me, I seem to have hit a snag when using it with my NAS device. My OS is Vista x64 and I am using wifi (usually) to connect to my storage device. For very large files (say 10+GB but the threshold could be lower) checksum will run to completion and claim that hashing has completed at which point I lose all network connectivity on my PC (to the internet, the NAS device, and presumably anything else on the network).

Any idea what might be going on here?

Very strange. It sounds similar to troubles I used to have on my own network, transferring large files. If I remember correctly, a driver update on my network card fixed it.

It doesn't sound like a checksum error, as such, more to do with your network throughput. I'd wager that any application that tries to pull that same file over the network in one go would invoke a similar situation (mine used to crap out pulling Apache log files into my text editor, amongst other things). Though without more information, that could just be wishful thinking on my part. Have you tried another hashing application on the file(s)? Try something similarly fast, like fastsum; see what happens.

I'd like to have a lot more information about the errors you are receiving, before I consider it further. Does your system, or any other applications give some specific error messages? Can I see those? If there are none, try and do some ftp over the link and see what your ftp client says (often ftp clients give rather good network error messages). I'm assuming your NAS has an ftp server on-board. At any rate, more information is always better than less.

Also, has the hashing actually completed? In other words, is there a hash file at the end of the process? Or did that also fail?

Thanks for the compliment, though.

;o)

I dug in to this a little more today and was able to resolve my problem. It was, indeed, a driver issue with my wireless card. I use an Intel wireless/PRO 5100 AGN. A simple driver update via device manager has made all my issues go away.

I did run an ftp test and it failed in a similar fashion (prior to my fix). Additionally, checksum was able to create a hash file, but it was of zero length. Fastsum, strangely enough, didn't suffer from this problem. It worked before I applied the driver update. I'm not entirely sure why that is. I suspect that it is a function of faster run time. Fastsum is limited to md5 and I prefer using sha1. I'm not sure if that incurs a runtime penalty with your program or not but my statistically insignificant tests indicate that Fastsum completed its md5 hashing ~10 minutes faster than checksum could complete sha1 hashing. That leaves about a 10 minute difference. That doesn't exactly leap out at me as hugely significant (82 minutes vs. 73 minutes...) but it's hard to say. Anyhow, it's a moot issue now.

While I have your attention, though, might I suggest one possible change? I really like your "status bar" windoid. It is both functional and lightweight. Two properties I really appreciate. I think there is an opportunity to make it even more informative, however. During these especially long runtimes I must admit that I really appreciate a visual indicator of progress. Rather than making a clunky little progress bar window, how about instead make the windoid itself a progress bar with text overlay? I think this adds a little more information relayed by checksum without doing violence to the spirit of your design.

Aha! Yes, it looked like a driver issue. I'm glad you got it sorted, you will probably find lots of network things work better.

As for the hashing speeds, considering that SHA1 hashing speeds are very much slower than MD5 hashing speeds, that's actually a great result for checksum! Think about it. Actually, if you really think about it, it's clear the bottle-neck isn't so much the speed of the hash calculation, as the network link. I'm curious how long checksum would take to do an MD5 of that same folder.

As for your suggestion, do you mean long operations with many files, or long operations on a single file. If it's the former, then this might be doable, though could potentially make it tricky to see all the information (though I know some thought on that would fix it). If it's the latter, it would require completely re-coding my hashing engine, which is designed to just "get on with the job", without interruption, not handing back control until the task is complete. This kind of change is unlikely to be considered at this time.

I'm thinking about it, though. It's a nice idea.

;o)

Ok, just for fun I ran checksum using md5. I'm afraid the result was less than illuminating: 87.9 minutes. These are very crude measurements as they completely disregard network traffic or the I/O load on the NAS device. However, I will say that in all cases I held my personal use of the device to zero, but that doesn't rule out background services, daemons, etc. Probably a much better test would be to copy the file to my local disk and run checksum against it multiple times using both algorithms.
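The "copy it locally and time both algorithms" test suggested above can be approximated without checksum at all. The following is only a rough sketch using Python's standard `hashlib`; the function names `time_hash` and `compare_algorithms` are made up for illustration, and the numbers say nothing about checksum's own hashing engine. Because the data is already in memory, disk and network I/O are taken out of the picture entirely.

```python
import hashlib
import time


def time_hash(algorithm: str, data: bytes, repeats: int = 3) -> float:
    """Return the best wall-clock time (in seconds) taken to hash `data` once."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        hashlib.new(algorithm, data).hexdigest()
        best = min(best, time.perf_counter() - start)
    return best


def compare_algorithms(data: bytes) -> dict:
    """Time MD5 and SHA1 over the same in-memory data."""
    return {name: time_hash(name, data) for name in ("md5", "sha1")}
```

Calling `compare_algorithms(bytes(64 * 1024 * 1024))` times both algorithms over 64 MiB of zeros. If the two timings are close while the over-network runs take over an hour, that points at the link rather than the algorithm.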

Anyhow, I know you are right about the network I/O being the limiting factor because I attained much faster run times when the file was located on an external HD attached via USB.

RE: the suggested windoid change, I really just meant anytime there was a very long delay in feedback given by the windoid, I found myself checking task manager for progress information. I think when you have the case of many files of short length, the user gets a fairly reliable indication of progress from the count of files thus-far processed. The shorter the average file length and the smaller the standard deviation from the average, the more reliable an indicator this count is. If the change would cause a noticeable degradation in performance then I wouldn't want it as I have other means of tracking program performance (task manager's I/O columns work fairly well for this). I suppose if it were me (and I have no idea how you coded your engine) I'd use a separate thread for the windoid and then occasionally pass it progress info from the engine thread.

Even so, forgetting all of that and just turning your processed file counter into a visual color gradient would have utility.
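For readers wondering what "passing progress info from the engine" might look like for a single large file, here is a minimal sketch in Python. It is not how checksum's engine works (by the author's account it hashes without handing back control); it simply reads the file in chunks and reports bytes processed through a callback. The name `hash_with_progress` and its parameters are assumptions made for this example, and a plain callback is used rather than a separate thread.

```python
import hashlib
import os


def hash_with_progress(path, algorithm="sha1", chunk_size=1024 * 1024, on_progress=None):
    """Hash a file in chunks, calling on_progress(bytes_done, bytes_total) after each chunk."""
    total = os.path.getsize(path)
    digest = hashlib.new(algorithm)
    done = 0
    with open(path, "rb") as handle:
        while True:
            chunk = handle.read(chunk_size)
            if not chunk:
                break
            digest.update(chunk)
            done += len(chunk)
            if on_progress is not None:
                on_progress(done, total)
    return digest.hexdigest()
```

A GUI could feed the callback's two numbers into a progress bar; a larger `chunk_size` means fewer callbacks and less overhead.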

Thanks, again for your helpful advice about the driver. This is a brand-new laptop, and I am still in the break-in phase.

Apologies for the delay in replying; it's been crazy here, this must have slipped through.

Well, the times tell us one thing; test results on that system are not reliable! Interestingly, someone on the main page was commenting about checksumming over networks, and I had to admit that it's not something I saw checksum doing a lot, and didn't make any special consideration for over-network use - I was far more interested in being able to work with all the different formats of cross-platform hash files. All my (and my beta testers') network tests passed without issue, I should add.

checksum is designed to load the data from the file system as fast as possible, and there probably are network setups where this could, if not overwhelm, then at least stress the networking components. Networking is always one of those areas where the mileage variables are stretched to their limits, and while there should theoretically be no issues hashing data over any network link; in practice, networks can contain many weak links, and I'd be more inclined to see checksum's behaviour as an indicator that other issues need attention. In other words, in an ideal network, there's no problem.

At any rate, checksum was designed to work with hashes from any platform, the idea being that file hashes are always created and verified locally, using whatever tools are locally available. Hashing over a network, if you think about it, is kinda backward, because the operating system will, at some point, need to pull that data over the network to work with it, and it's AFTER that process that its integrity might need checking. The OS on the other box is the one best suited to checking its local hashes.

NAS sits squarely in the middle of all this, and perhaps demands special attention. I'll definitely be looking into this, checksum sensing over-network use, and perhaps altering its data reading behaviour slightly. Thanks for the heads-up.

As far as the progress bar goes, yes, I hear exactly what you're saying. My take on it is that verifying hashes is something that the computer does, not something *I* do. That there's a progress windoid at all, is an indicator of how far I'm prepared to compromise my integrity to produce usable software!

Maybe in a future version. Once checksum has a million users and a team of coders!

;o)

I just want to say how much I love your program. I'm a bit paranoid sometimes, so this tool really helps. Also, I've been working on a cryptology project and am proud to include an example in it using your program. If I had the ability to, I would definitely donate (and buy a shirt; I already have plenty for now, sorry).

I love all of the information about the tool on your site. It's pretty simple doing regular things, but it just makes me giddy seeing all of the capabilities that this amazing freewa--ahem, shirtware has to offer. I hope to see more amazing tools. You've done a great job!

High praise indeed! Thanks!

Your wish is my command.. Believe it or not, I am, at this very moment, putting the finishing touches to not one but THREE brand new Windows apps!

Although not released yet, their in-progress pages do exist, if you know where to look.

And hey! I'm proud to be a part of your cryptology project!

;o)

Lots of capability in this proggy!

Unfortunately it is a little verbose. It took me a half hour just undoing the commenting in the checksums.ini file so my editor could properly line wrap them. Unfortunately just about every special case was exercised, that is why it took so long - very tedious.


When i was done there was no way to do what i wanted;-( To my way of thinking that option is obvious: what is the checksum for the ENTIRE directory?

To work around it i had to:

1) create a file of all checksums recursively in a directory. (with a single file, don't know why this is not the DEFAULT)

2) rename the file from .hash so checksum would not ignore it

3) create a (sub)directory for each directory to be compared

4) hash the (sub)directories in 3)

5) finally do the comparisons

An option for a simple summary checksum would obviate this convoluted workaround...

lol! what you wanted to do is built-in!

gheil, It sounds like you are trying to use checksum as a file-compare utility. It isn't a file compare utility. Sure, it has certain applications along these lines, and you can even drag two files into simple checksum for an instant hash-compare, but checksum is strictly for creating and verifying checksums.

Your whole procedure sounds extremely convoluted. If you tell me precisely what you are trying to accomplish, I probably know of a better way for you.

;o)

Cor

ps. You can remove ALL the comments with a single regex replace in any decent text editor.
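As a rough illustration of that single regex replace, here is the same idea in Python. It assumes that comment lines in the ini file begin with a semicolon (the usual ini convention; check your own file before relying on it), and the name `strip_ini_comments` is hypothetical. Only whole-line comments are removed; anything after a setting on the same line is left alone.

```python
import re

# A whole line that is only a comment: optional indentation, a semicolon,
# the rest of the line, and its line ending (or the end of the text).
COMMENT_LINE = re.compile(r"^[ \t]*;.*(?:\r?\n|\Z)", re.MULTILINE)


def strip_ini_comments(text: str) -> str:
    """Return `text` with every whole-line ';' comment removed."""
    return COMMENT_LINE.sub("", text)
```

In a text editor the equivalent search pattern is the same expression with an empty replacement. Work on a copy of the file, since the comments are also the documentation.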

I have zero experience with checksums but here is what I am trying to accomplish.

I will be generating data files each day to a flash card. I need to be sure that the files are not altered.

Is there a way that the software can reside on the flash card and alert me if any file has been tampered with since its original creation?

Cor

Yes i ended up using a file compare on two .hash files to see if they were identical. But that requires using another program...

What i need is a single number (eg a MD5) for an entire directory. Not one number for each file in that directory (recursively). For my purposes that file ended up being ~66kb requiring some kind of program to test. If there is a differing ordering of files in a directory a comparison program is confusing.

Nice work on your app, will be better when you sort out the 4Gb SHA1 calculation issue.

What your app does not do that i originally downloaded it for is this.

I have 2 EXISTING folders say

C:\movies

Z:\movies.Backup

and they both have identical (un-verified) files in them.

I want to delete the original c:\movies as i have watched them but want to keep a backup.

How can i do this?

These are measured in the TB so copying is not an option as they took backup jobs near 200hrs to do.

At the moment i am using ztree2.0(binary compare) to do this but,

it's not a simple click option and i do not know what the crc/md5 method is.

I was thinking to run your check on both the 2 different folders to create the md5 hash files but then how can i compare both sets.

Your solution or Thoughts would be much appreciated.
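For anyone with two hash files to compare by hand, the sketch below shows one possible way to do it in Python, independent of the order of entries. It assumes the common one-entry-per-line layout of a hex digest, a space, a `*` (or second space), then the file name, as in the example near the top of this page; the function names are made up for illustration, and path separators are compared exactly as written.

```python
import re

# "<hex digest> *<name>" or "<hex digest>  <name>"
ENTRY = re.compile(r"^([0-9a-fA-F]+) [ *](.+)$")


def parse_hash_text(text):
    """Map each listed file name to its (lower-cased) hash; other lines are skipped."""
    entries = {}
    for line in text.splitlines():
        match = ENTRY.match(line.strip())
        if match:
            entries[match.group(2)] = match.group(1).lower()
    return entries


def compare_hash_sets(first, second):
    """Report names unique to either set, and names whose hashes differ."""
    shared = set(first) & set(second)
    return {
        "only_in_first": sorted(set(first) - set(second)),
        "only_in_second": sorted(set(second) - set(first)),
        "different": sorted(name for name in shared if first[name] != second[name]),
    }
```

If all three lists come back empty, the two folders held the same files with the same contents at the time they were hashed.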

MrCyberdude, comparing files, just drop them both onto Simple Checksum. Job Done.

As for directories; I do this sort of thing a fair bit myself, like this..

gheil, that simple technique will also work fine for you. Understand, you CANNOT checksum directories, per se. Only the files in them. If you really must checksum a directory as a single unit, you will need to tar/zip/archive it, first.
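If a single summary number is still wanted without archiving the folder first, one workaround outside of checksum is a "hash of hashes": hash every file, sort the entries by relative path so that directory ordering cannot matter, then hash that sorted listing. The Python sketch below is only an illustration under those assumptions; `directory_digest` is a made-up name, and the resulting number is not something checksum produces or can verify.

```python
import hashlib
import os


def file_digest(path, algorithm="md5", chunk_size=1024 * 1024):
    """Hash one file's contents in chunks."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def directory_digest(root, algorithm="md5"):
    """Return one digest summarising every file under `root` (names and contents)."""
    entries = []
    for folder, _subdirs, files in os.walk(root):
        for name in files:
            full = os.path.join(folder, name)
            relative = os.path.relpath(full, root).replace(os.sep, "/")
            entries.append((relative, file_digest(full, algorithm)))
    summary = hashlib.new(algorithm)
    for relative, digest in sorted(entries):  # sorted, so listing order is irrelevant
        summary.update(f"{digest} *{relative}\n".encode("utf-8"))
    return summary.hexdigest()
```

The drawback is the one Cor describes elsewhere on this page for disk hashes: when the summary differs, it cannot say which file changed, so keep the per-file hashes as well.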

;o)

Cor

I downloaded the checksum but when i tried to upload it this was the message showed to me

"The Compressed (Zipped) Folder'C:Users\DELL\Downloads\checksum.zip' is invalid"

Though i am Just a beginner and really love to learn Hacking as a whole course of my Life.

I love it. Please help me out with this as a start, and i will go on to be a STUDENT here. NO SHAME bro

The Corrupt Zip is as old a technical dilemma as the zip itself, most likely older! You wanna hack data systems, you will need to learn how to deal with it. In this case it's as simple as downloading again; not always so, though.

Your reference to shame interests me, but I don't have time to delve. Suffice to say, the best hackers mostly start by hacking themselves. ;o)

my PC has died. It says the checksum files don't match (paraphrasing) and it won't boot. It takes none of my recovery disks and wants the whole Vista installation disk which I don't have.

I am unable to uninstall checksum. When I attempt it via the uninstall option in PROGRAMS, it brings up a text file. Checksum does not show up in Windows ADD-REMOVE SOFTWARE.

Or else, simply delete the checksum program folder and user prefs folder, job done. Aside from the data mentioned above, there's nothing else left on your system. ;o)

Is there a way run this on a folder (recursively), and then have the changed sub-folders and files logged before the new checksum is written?

i am a student and wish to learn more about hashes and the encryption/decryption processes. consider me just a beginner.

is it possible to find out the serials of small lightweight programs using brute force? are there any programs for this? i have a program called ophcrack, but don't know how to use it.

are hashes related to it in any way?

and pls do tell me how to make a torrent file if i have a whole movie file myself.

thanks in advance.

pls reply .

Great checksum! I was wondering, is there a way to make the root file automatically go to the fallback location, and not the directory being scanned (when the folder is not read-only)? I want to run checksum on some folders which I can read on the network, but not alter or add to in any way.

If you had a command that could change the root destination that would be awesome!

I second Dave's proposal, and also be able to run verify on a read-only directory with a different hash location but without needing a hash with absolute paths.

If you are referring to files on an entirely different volume (DVD, etc.), then how will checksum know where the files are without the absolute paths stored in the hash file? ;o)

I love your program and I am happy to have found it. But there are a few questions and options I would like.

1. Working a directory tree recursively, Is there a way to create individual checksums for each file and at the same time another in the root folder (cr1 and cri combined)? So when I need the two options I don't have to run the task twice.

2. Double-clicking a file in Windows Explorer whose file extension has no assigned program runs checksum. Is this intended or is it a bug? How can I switch it off?

3. The version history shows 1.1.6b as latest version, where can I download it?

4. I read that you consider checksum to be "only" for creating and checking hashes, but couldn't you implement a comparison function? E.g. creating a hash and opening an input box in which to paste a second hash and compare the two hashes? Or clicking a hash file as if to check it, but then opening an input box in which to paste a second hash and compare them?

Or do you have a program that could do just that?

5. I would love a more sophisticated Synchronize function. With options to remove the hashes of deleted files to clean the checksum files and to recheck files which have been changed since the last synchronization. It might be done by either storing file sizes and dates in the checksum file or by using the archive bit, resetting it after checking a file. Then checking a file again in case the archive bit is set again.

But anyway checksum is the best program I found for checksums. Thank you very much!

3 DVD burners, 3 copies of the same image, no existing files on hdd, but want to confirm all three DVDs are identical. I assume checksum/hash, but I'm new to this, so if you could give me some pointers. What would be the best, accurate, and fastest way to go about it

Well for more information, there are 9 DVD burners, running through different SCSIs, but the same comp (and all are assigned drive letters), but only 3 copies of each image, so the ultimate goal would be to be able to run 3 different checks on 3 hopefully identical images all at once with the 9 drives (unless it goes really fast; I have no clue how long each check takes on a full DVD). Unfortunately the DVD burner software/device I use doesn't have any verifying options :( so I assume this is the way to go, and possibly to run 3 instances of the same program, to verify the 3 DVDs each. Any help at all would be greatly appreciated

Hi corz.

First and foremost, thank you for the very, very, very fast checksum-tool. I have one feature-request though:

The ability to verify a checksum-file with relative paths against the relevant folder, where-ever that folder might be situated. More verbosely, I would like to situate my hash-files in a separate folder from the hashed files, so that I can move those files around and still be able to verify them without having to move the hash-files around as well. Thanks!

/archivist

I found your site interesting, but what I have read is still not clear to me. I will visit again soon if I have time.

The thing really interesting to me is the use of checksum. Maybe it's just difficult to understand.

I'll come back to your site soon.

Godspeed...

Hi,

Realising this is probably due to deficient thinking, I can't seem to find where the logs are being written. I thought it would be with my checksum.ini file, but there is nothing there and a system-wide search for log (or html) files doesn't show any that are obviously (as in written in the past day or so) belonging to checksum.

The command I'm using is:

""c:\Program Files\corz\checksum\checksum.exe" cils d:\"

My understanding was that cils would create a sha1 hash for each file and log it. d: is a writable drive.

Only checksum.ini is at %userprofile%\AppData\Roaming\corz\checksum\ nor is there a directory at %userprofile%\log\, %appdata%\log. Can't think of other places it would be hidden.

I've been through checksum.ini and other pages on this site but for the slow-witted it doesn't seem that file locations are that apparent.

P.S. Realising is British spelling, not at all improbable.

During verification, if any logs are created checksum will, by default, open that folder for you at the end of the operation, no need to search.

;o)

ps. "realize" and "realise" are both valid here in Scotland.

Thanks for your reply.

Ah right. I missed the switches that are only activated on verification. So, the 'l' switch isn't for logging everything?

I guess it shall still suit my purposes, with verification. The intention is to emulate end to end filesystem checksum; a poor man's ZFS checksumming if you like. Though I would like to know every time the checksum is created, and how many times previously checksums had been created. Kinda an anti-tamper process except it is about data corruption rather than security.

All said and done, this software is awesome, and you can count on a donation and promotion.

In the checksum.ini file, I love how verbose you are in explaining what you are intending with each item. Unfortunately most of the explanations go over my head.

P.S. Okay, why did your website tell me 'realise' is an "improbable" word?

This has got to be the most comprehensive MD5 hasher I've found which meets 95% of my needs.

I've a couple of questions which I'm not completely clear about

1) when i synchronize, will deleted files be updated into the .hash? and will changed files be updated into the .hash as well?

2) will it be possible to create a .hash in each folder recursively. i can foresee the need to move subfolders and i would want to check the integrity. yet i don't have to create a .hash at each folder manually.

for example, this is my folder structure... which i've hashed at E: level.

e:\photos

e:\photos\2010

e:\photos\2010\jan...

e:\photos\2011

e:\photos\2011\jan...

e:\videos

e:\videos\english

e:\videos\french...

e:\stuff

e:\stuff\i like

e:\stuff\i hate...

and i want to copy all the photos in e:\photos\2011 for my friend. i would now need to rehash at that level again. am i making any sense?

thanks!

Hi Cor,

Firstly let me say fantastic program. You have managed to include many features but still kept it useable!

I am just using checksum from a scheduled script to verify my backup files are still good after they are transferred over a WAN connection. My backup program actually generates the MD5 hashes internally.

So far everything is working fine except I have around 400GB in total of files to check. What I really want to do is check just the few hundred MB of updated files after they arrive at the destination and go over the whole set of files once a month or so.

Can I selectively verify the hashes directly in checksum based on the archive bit on the files? Once the check is passed I could then reset the archive bit.

If it can't be done directly in checksum can checksum selectively verify files based on an input file list? I shouldn’t have too much trouble automatically generating the input file based on files with the archive bit set and then using the same input file to reset the bit once the test has successfully completed.

This is the command line I’m currently using and I have made some tweaks to the ini file for the stuff I couldn’t do on the command line.

checksum.exe" vflrq-t %target%\ >> %logfile% 2>&1

Love to hear your Ideas on solving this problem.

Thanks

Yes! It's called a .hash file!

You have to understand, during verification checksum isn't rummaging through your filesystem checking archive bits or anything else, it is reading the .hash file (or .md5 or whatever) that you feed it. If you feed it a system path, checksum scans for all available .hash files. Once checksum has its .hash file(s), it simply checks all the files listed within.

If your backup software is producing hashes, it may be possible for it to pipe the new hashes to a single file, even a temporary file, which checksum could work with.
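To make the "the hash file is the file list" idea concrete, here is a small Python sketch of verification driven purely by a hash file: it reads the listing, hashes only the files named in it, and never scans the rest of the disk. It is an illustration, not checksum's code. It assumes the "digest, space, `*`, name" line layout, names relative to the hash file's own folder, and an algorithm guessed from digest length (32 hex characters for MD5, 40 for SHA1). The status labels are this example's own, not checksum's.

```python
import hashlib
import os
import re

ENTRY = re.compile(r"^([0-9a-fA-F]+) [ *](.+)$")
ALGORITHM_BY_LENGTH = {32: "md5", 40: "sha1"}  # hex digest length -> algorithm


def hash_file(path, algorithm, chunk_size=1024 * 1024):
    """Hash one file's contents in chunks."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_hash_file(hash_file_path):
    """Check every file listed in the hash file; return {name: status}."""
    base_dir = os.path.dirname(os.path.abspath(hash_file_path))
    results = {}
    with open(hash_file_path, encoding="utf-8") as listing:
        for line in listing:
            match = ENTRY.match(line.strip())
            if not match:
                continue  # comments, blank lines, anything unrecognised
            expected, name = match.group(1).lower(), match.group(2)
            algorithm = ALGORITHM_BY_LENGTH.get(len(expected))
            # Hash files written on Windows may use backslashes in names.
            target = os.path.join(base_dir, name.replace("\\", os.sep))
            if algorithm is None:
                results[name] = "UNSUPPORTED"
            elif not os.path.isfile(target):
                results[name] = "MISSING"
            elif hash_file(target, algorithm) == expected:
                results[name] = "OK"
            else:
                results[name] = "MISMATCH"
    return results
```

A backup job that writes only the newly transferred files' hashes to a temporary listing gets exactly the selective check asked about above: the few hundred MB are verified and the other 400GB are never touched.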

But really, 400GB isn't so much. If you set all this to happen when you are in bed, you won't even notice it has, unless there are errors (checksum will (optionally) pop open the log folder).

;o)

ps. be careful with the "l" switch, it can produce HUGE logs.

Hi Cor

Thank you for a very useful program. It really is a must-have on any computer. I have been using it for checking copies of folders made for archival purpose, works perfectly.

One problem I do have, if you can suggest a solution. I inadvertently did individual checksums instead of "root" on a number of folders containing large amounts of photo-files, ending up with hash-files on every photo. How can I remove the hash-files instead of only hiding them?

Thank you.

If you view the results by "details", you can order by date, size, etc., makes it easy to delete only the ones you want gone.

;o)

Hi Cor

First I wanna thank you for your program; it's really unique! Also, I have a problem: I have a bunch of files on a drive and I have already checksummed them, but I have to re-check them with my girlfriend and I am sure that we will delete some of them, so what can I do to re-synchronize everything? I know about the synchronize switch, but when a file is deleted it just shows "sha 1 missing". I wanna delete all the hashes of the deleted files to avoid the "sha 1 missing" errors.

If you were 100% certain that all the missing files were intentionally missing, you could have checksum go straight to manipulating the existing .hash file(s), removing entries for your "approved" missing files.

checksum does most of this already, right up to the point where the existing .hash files are altered/replaced. Something along these lines is planned for a future version.

If you use a "root" .hash file and you are handy with some form of scripting language, I'm sure it wouldn't be too difficult to create a script that parsed checksum's log (searching for "MISSING") removing those entries. Of course, you would need to instruct checksum to output plain text logs (XHTML logging is the default).

With a "root" .hash file it's also a trivial operation in your text editor, with a little regex magic, of course.
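As one possible shape for such a script, the Python sketch below prunes a "root" hash file without parsing any log at all: it checks the disk directly and drops every entry whose file no longer exists. This is a different technique from searching the log for "MISSING", chosen here because the log format is not described on this page. The function name `prune_missing` and the "digest, space, `*`, name" entry layout are assumptions for illustration; only use something like this when you are certain the missing files are meant to be missing.

```python
import os
import re

ENTRY = re.compile(r"^([0-9a-fA-F]+) [ *](.+)$")


def prune_missing(hash_text, base_dir):
    """Return (pruned_text, removed_names); entries for files absent under base_dir are dropped."""
    kept, removed = [], []
    for line in hash_text.splitlines(keepends=True):
        match = ENTRY.match(line.strip())
        if match:
            name = match.group(2)
            # Hash files written on Windows may use backslashes in names.
            target = os.path.join(base_dir, name.replace("\\", os.sep))
            if not os.path.exists(target):
                removed.append(name)
                continue
        kept.append(line)  # comments and other lines pass through untouched
    return "".join(kept), removed
```

Write the pruned text to a new file and keep the original until a verify run against the new one comes back clean.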

Failing that, the easiest way to approach this currently is to:

Now you have up-to-date, error-free hashes for the entire volume. If the volume is large, do the last part when you are sleeping.

While I'm here I should mention that the upcoming beta of checksum, as well as enabling you to differentiate between CORRUPT and CHANGED files, has the ability to toggle the reporting and logging for these and MISSING files, you can simply ignore them!

Also i have another problem when I verify the checksums, I get that error of "cannot create hashes even on the fallback directory" something like that I have read your site and it says that maybe is because my drive is read only but that is not the case.

Thanks in advance!

But it may be something else. Try re-installing checksum and moving/removing any checksum-created fall-back folders. The installer is completely non-destructive, so it won't affect your settings.

If you are still having issues, mail me with rough details of your computer setup and a copy of your checksum configuration (ini) file, I'll look into it.

;o)

Thanks Cor for the quick reply and easy solutions, for my first problem I did what you told me I verified all, then deleted the hashes.

And for my second problem, it was because the files were very deep in the drive (folders and folders and folders...) and maybe because it was a saved web page, so I deleted that and I have just created a root hash.

So Thanks for your help again and for your great software!

Hi,

Trying to modify options with Shift and Ctrl keys before launching but all I get is a help page. Help needed.

I want to compare a set of folders to see if all the files are in place and, after that, to verify the integrity of the files. How should I proceed to achieve the result?

Thanks in advance. Congratulations for the soft. Regards.

Hi,

"Are you saying that when you hold a) <SHIFT> or b) <Ctrl> during launch you get a help page instead of the a) options dialog and b) force verify controls? Seriously? Nah, I misunderstand you, surely."

No, you did not misunderstand. That's exactly what happens. I am on Windows 7 64-bit. But it is not a help page exactly; it is a dialog that begins with " checksum [v1.2.3.9] was given nothing to do!"

Thanks in advance. Regards.

And of course, there's no point just launching checksum (unless you want to read the "checksum was given nothing to do" dialog), you need to launch it with something to do (i.e. from your Explorer context menu for a file/folder, drag & drop, etc.)

;o)

Hi Cor.

I have some files that I have previously checksumed that I know have changed (and know change a lot) but I want to re-checksum it prior to backing up to another drive so I can verify the checksum on the backup to make sure it has copied ok, and the backup is still good in the future.

I thought I could just run checksum with "qctry" but it doesn't seem to update the hash file if it already exists? I know synchronise would add new files, but doesn't update existing hashes (which is entirely sensible, you generally wouldn't want to update the checksum to include any possible file errors!).

The only way I can get the behaviour I want is to delete the hash files and re-checksum, which is fine I guess - just wondered if there was a way to force checksum to regenerate the hashes itself?

Thanks in advance.

The best way to go about this would be, as you suggest, to verify the existing hashes, then ensure the error log contains only files you expected to be changed, then hash again (overwriting existing hashes).

If it's something you do a lot, because checksum can be controlled by the command-line, it should be easy enough to set up a wee batch script/batch runner set. ;o)

I got two different files in a folder with the same hash. Only one file gets written to the hash file. I would love to get a switch (I use command-line) to hash and list all files, even if they have the same hash. If the second file isn't written, you might never know if it gets corrupted.

BTW - Great App!

Please mail me full details, including the full names of all the files in the dir, what command-line you are using, and the resultant .hash file. If you can, zip and send the entire dir to me.

;o)

Right you are, the file "folder.jpg" is by default ignored in the prefs. Sorry for the trouble.... I found this after sending the zipped folder... so you can ignore the email. Thanks a lot.

Hi Cor,

About a month ago, I told you that the soft was not working with Shift or Ctrl keys before launching. Still the same :-( I am using an HP Pavilion with 8 Gb Ram, Windows 7 and no success. What I want is to generate hash files for a number of files in a folder to compare with another one. What I mean is that if I have 100 files in folder A and 120 files in folder B, I want to generate a hash file for each one of the files in each folder to compare them later. How can I achieve this (with command line I mean) provided I can't launch the program any other way?

Thanks in advance. Regards.

Hi Cor,

Just tested in Windows XP and it works normally. Any suggestion?

Thanks in advance. Regards.

As for the folder compare, checksum isn't really designed for this task, but creating a standard checksum file for the folders (standard right-click on folder, choose "Create checksums") is all you need. To compare with another folder simply copy over the .hash file and click it.

;o)

I have been using checksum to verify files i archive to my LG NAS N1A1. When i run verify on a local file on my computer, checksum works very fast like it is supposed to. But when i run verify on a copy of the file over the local network which resides on my NAS box, it takes forever. Why is this and is there anything that i can do about it?

Basically, anything you can do to speed up your network (and there is usually a lot you can do), will speed up checksum. It's waiting for data from your NAS. Check your network settings thoroughly, and consider gigabit ethernet (compared to a modern hard disk read, even a super fast LAN is DEAD SLOW). checksum won't be the only program that will benefit from improved network speeds.

;o)

Hi Cor,

Everything working fine now! It was just that I did not hold the key down until the interface appeared. You should emphasize that the user must hold the key until checksum appears. Otherwise, dummies like me will be playing the idiot for a while

Thanks a lot. I will purchase a shirt, sure!

Hi Cor,

I have made several copies of DVDs to hard disc. Now, I want to compare each DVD with the corresponding folder. How should I proceed to compare both? For each folder I can generate a hash file, but how do I proceed with the DVD, as it is read-only? And, once the hash file is generated for both, how do I compare them?

Thanks in advance. Regards.

P.S.: I am already a proud buyer of your shirt

The entire structure will be recreated, so you can simply drag and drop the whole thing over to the copy, if need be. Verify normally with the Verify checksums.. command.

Remember, if you have already hashed the copy, you will need to rename one of the (sets of) .hash files or else copying over the directory structure will overwrite the copy's .hash files!

So it's best to simply begin with hashing the DVD. If all goes to plan and there are no errors when you copy the .hash files over, your DVD .hash files can become your copy's .hash files! Job done!

checksum has a number of configurable methods of dealing with read-only fallback conditions, as well as a myriad of configurations for .hash file naming, so if this is something you do a lot, you will probably want to drop checksum.ini into a decent text editor (one with syntax highlighting) and have a scroll.

By the way, nice shirt!

;o)

Hi Cor,

Thanks for your answer. By the way, I can't find the file checksum.ini. I have registered with the program itself but I have been looking in the corresponding folder but there is no checksum.ini (and I have done a search in all the PC of course). Your advice would be great.

Thanks and regards.

As well as the absolute best way to get to checksum.ini, there's also a link there ( and right here! -> ) to the brief checksum.ini page.

Have fun with those prefs!

;o)

Hi,

I have copied several DVDs to corresponding folders in hard disc. Now I want to compare DVDs to folders. What I would like is to have a unique file hash for all the DVD or folder (not a hash file with a list of file hashes in it). This is because each DVD has about 17.000 files. Is there any way to do so?

And another thing. Is there any way to make the process faster because to generate the hashes for a DVD it takes about an hour and a half. I have 78 DVDs, so it will take me about 14 days working 8 hours a day :-(

Thanks in advance. Congratulations for the software and best regards.

As it is, simply hash the disk as normal, perhaps with a root hash file, and then copy that to the hard drive folder for verification.

And what's wrong with having a .hash file with all the files in it? 17,000 files is no problem for checksum. I've got .hash files with hundreds of thousands, maybe millions of entries (I know for a fact my local archive drive .hash has over 750,000 entries).

File hashing is a superior system to disk (image) hashing -- if one single file is damaged, with a disk hash, you have total checksum failure and no way of knowing which file is damaged. Ouch! It's a lot easier to locate and renew one single file than 17,000 of them!

As for making it faster, checksum will hash the disk as fast as your operating system can read it. My workstation is ancient and I can hash a DVD in a few minutes - it sounds like you need to upgrade your DVD reader - it will only cost a couple of man-hours or less for a decent fast model. Think of the savings!

By the way, I recommend Pioneer for DVD drives.

;o)

I second Ricard's request.

The "tricks and tips" page describes how to checksum a burnt CD or DVD by first dumping the media contents in an ISO file with ImgBurn, then using the corz checksum utility on the ISO file.

One really cool feature would be to bypass the need for ImgBurn, and directly create or verify the checksum of the bytes on the burnt volume.

Thoughts, Cor?

For those that really need this sort of functionality, ImgBurn is an excellent program, beautifully simple and intuitive to operate, but with all the advanced features a geek could need. If you have a DVD drive or ever handle disk images, it's an essential tool for your kit-bag.

;o)

Hi Cor,

Thank you for your answer. You write:

Actually, I sometimes use a Windows machine to handle ISOs that are not meant to be mounted on Windows, such as Linux-created ISOs. These ISOs often contain file paths that are too long to be read by the Win32 API, and thus, the file hashing is not reliable. However, the checksum of the full ISO is still possible and meaningful. Hence my request.

Thank you,

--Fred

Hi Cor,

Great app! So far I have been able to do nearly everything I want with it; only one thing is missing: the ability to make a single-file hash with an absolute path. Maybe I missed a switch or a combo of switches, but I just can't make a single-file hash with an absolute path. I hope this is a "lack of reading on my end" type of post; if not, then I guess it's a request type.

Paskoe
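For illustration only, the kind of entry being asked for can be sketched in a few lines of Python. This is not a checksum feature or switch; `absolute_hash_line` is a hypothetical helper, and the `digest *path` layout is assumed from the example hash line near the top of this page.

```python
import hashlib
import os


def absolute_hash_line(path, algo="md5"):
    """Return a 'digest *absolute-path' line for a single file."""
    h = hashlib.new(algo)
    with open(path, "rb") as f:
        # Read in 1 MiB chunks so large files do not need to fit in memory.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return f"{h.hexdigest()} *{os.path.abspath(path)}"
```

Because the path is absolute, a line like this stays meaningful wherever the hash file itself is stored.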

Hi Cor,

Here is my scenario.

I hash files on my PC's internal hard-drive with a copy of the 4 level directory structure of my two archive drives (one is redundancy). Some hashes are complete directories, others are single files. I then transfer to the archive drives. I check the hashes to verify good transfer. All my hashes are put in the root of my archive drives for easy referencing of the files. I put a copy of the hashes in a backup folder for peace of mind (I use a little app that adds the drive letter to the hashes in just a few clicks). I also append all new hashes to a master hash file for the whole drive (I am aware of Checksum's synchronization feature, but that means rehashing the new files and that is extra work/time I can easily do without). I can thus check the whole drive, if I need to transfer the whole drive (or to verify drive health) or just check one single hash when I need to pull just that file from the archive, all the while having a functional backup of the hashes.

Presently, I am adding the paths manually, until I find a better solution, quite tedious work.

I fully understand that this doesn't seem sensible (my wife is always telling me that I am not sensible enough, go figure!). Adding paths to single file hashes is only good if you don't want your hash files in the directory your file is in. I am guessing most people are content with the hash file right next to the hashed file. Unfortunately, that is not my case. I hope this is enough to convince you, if not, the search goes on. I would really appreciate an all-in-one hash tool for my needs.

Thanks for listening!

Have a great day!

Cheers,

Paskoe

I guarantee you will save time compared to your current method, because you don't have to *do* anything, at least not manually. Let checksum do the leg-work!

Also note, during synchronization, there is no "rehashing", only files that do not already exist inside the .hash file will be hashed. Existing hashes are ignored.
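The synchronization behaviour described above can be sketched roughly as follows. This is an illustrative Python approximation, not checksum's implementation; `parse_hash_lines` and `synchronize` are hypothetical names, and the `digest *name` line format is assumed from the example near the top of this page.

```python
import hashlib
from pathlib import Path


def parse_hash_lines(text):
    """Parse 'digest *name' lines into a {name: digest} dict, skipping comments."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        digest, sep, name = line.partition(" *")
        if sep:
            entries[name] = digest
    return entries


def synchronize(root, entries, algo="md5"):
    """Hash only the files under root that have no entry yet; return the new entries."""
    root = Path(root)
    added = {}
    for p in sorted(root.rglob("*")):
        if not p.is_file():
            continue
        name = p.relative_to(root).as_posix()
        if name in entries:
            continue  # already listed: the file is not even opened
        added[name] = hashlib.new(algo, p.read_bytes()).hexdigest()
    return added
```

The cost of a run like this is proportional to the new files only, which is why there is no "rehashing" penalty.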

I can certainly look into adding absolute paths for all contexts, but I think if you try letting checksum take care of this you will save yourself a lot of hassle.

;o)

Greetings - first of all, thank you so much for this wonderful tool!

I am wondering - is it not possible to create files with .md5 extension rather than .hash extension when using the batch checksum generator on directories? It seems you only have this option when creating checksums from the files themselves. I am guessing there may be a good reason for this limitation...

Thank you sir!

unified_extension=false

And ALL checksum files will have an .md5 or .sha1 extension, regardless of the context.

;o)

All I want is a simple syntax to create 1 checksum file for 1 input file that I can place in a bat file.

I don't want ini files created all over the shop in every user that happens to invoke the command

I don't want popups, ever.

I don't want helpful crap

I want a formal syntax that does exactly 1 thing. This is the simplest possible use of a command line yet ...

checksum.exe -qualifier < myfile.txt > myfile.hash

;o)

Hi Cor,

Excellent work on checksum, impressive amount of effort to cater for (so many!) various options. Still having some trouble to make it perform the way I'd like though. I'm wondering whether I've misunderstood the .ini settings, and whether you could explain.

The problem: I need to protect an entire OS inside a virtual machine from user-tampering, more specifically, from introducing their own executables renamed as OS .exes, .dlls, coms, and such. (I can prevent any unrecognised process from executing using other means). So I set out_dir to some location users can neither see nor access, and run checksum.exe cr on c:\windows\system32 with a mask for *.exe,*.dll etc, set absolute_paths=true, and all .hash files are created in my destination dir as expected.

But when I wish to verify, I call checksum v <hash-path>, and I get a log with errors for every single file as "missing" because it apparently still expects each hash file in the associated source dir. (It works fine if hashes are written out there.) Unfortunately, this is not an option for me because even with the hidden attribute it would be trivially easy for users to find the hash files there, figure out that it's md5 or sha1, and edit them to match the rogue executable that is to replace the original. I could do a checksum on the hash files themselves, and so on, but life's too short for infinite recursion.

So my question is, what .ini settings would cause checksum.exe to look for the source files in their original location regardless of where the hashfiles themselves are stored? Despite your extensive documentation in ini and on the web, I haven't figured it out yet. What might I be doing wrong?

Thanks in advance for any help you may be able to provide.

one_hash=true

in the ini file. Currently, checksum only creates absolute paths for root .hash files. For your needs, a single .hash file sounds like a better option, anyway.

;o)

Hello.

First and foremost, thank you for your hash tool.

I'm trying to create a checksum with the parameter "1sq" of the following folder/file:

"z:\Filme_1\Die Geschichte des Jungen, der geküßt werden wollte.The Story of a Boy Who Wanted to Be Kissed.L'histoire du garçon qui voulait qu'on l'embrasse.Französisch\L'Histoire du Garcon qui voulait qu'on l'Embrasse.Fr.avi"

Most likely due to the length of the path and file name, checksum isn't able to store the sha1 file in the folder. To me it appears that the hash creation itself is done.

Do you see any solution to that problem?

Thanks.

indigital

ps. Upgrade to the latest version, LONG PATHs are now supported.

Hi corz

I'm writing here because I've sent 2 emails to you, but they were left without a response.

First of all, great program, and the registration procedure (purchase procedure) made me smile a bit. You have a pretty good sense of humour.

Ok, to the main point of my message. I like the synchronize option, but one thing I'm missing, and which I think would benefit the application, is a check for files that are no longer there, so that creating checksums with the "synchronize" option also removes those entries from the previously created hash file.

Can you implement this feature in the next version of corz checksum?

ps. When you respond to this message, can you let me know by email that you did, so that I don't have to come here every day and check manually?

cheers

Lucas
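As an illustration of the pruning requested above, stale entries could be stripped by a small external script. This is a minimal Python sketch, not a checksum feature; `prune_missing` is a hypothetical helper and the `digest *name` line format is assumed from the example near the top of this page.

```python
import os


def prune_missing(hash_text, root):
    """Drop entries whose file no longer exists under root.

    Returns (new_hash_text, dropped_names). Lines that are not
    'digest *name' entries (comments, blanks) are kept untouched.
    """
    kept, dropped = [], []
    for line in hash_text.splitlines():
        digest, sep, name = line.partition(" *")
        if sep and not os.path.isfile(os.path.join(root, name)):
            dropped.append(name)
        else:
            kept.append(line)
    return "\n".join(kept) + ("\n" if kept else ""), dropped
```

Returning the dropped names alongside the new text makes it easy to review what would be removed before overwriting the hash file.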

First thing, great work! So far, it looks like it already does just about everything I need. What I really need is the command line version to return a pass/fail that I can easily capture so I can have a script perform certain operations in case of failure. What I am thinking is using this as part of a Jenkins job (a plugin for Jenkins might be a potential add on for you?? hint-hint-nudge-nudge) where if the checksum fails, I send an email to folks about the failure.

;o)
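For the pass/fail idea above, a wrapper script of the following shape would give a build job something to branch on. This Python sketch says nothing about checksum's own exit codes; `verify` and `main` are hypothetical names, MD5 is an assumed default, and the `digest *name` line format is assumed from the example near the top of this page.

```python
import hashlib
import os
import sys


def verify(hash_text, root, algo="md5"):
    """Return a list of (name, reason) for every entry that fails verification."""
    failures = []
    for line in hash_text.splitlines():
        digest, sep, name = line.strip().partition(" *")
        if not sep:
            continue  # comment or blank line
        path = os.path.join(root, name)
        if not os.path.isfile(path):
            failures.append((name, "missing"))
            continue
        with open(path, "rb") as f:
            actual = hashlib.new(algo, f.read()).hexdigest()
        if actual != digest.lower():
            failures.append((name, "changed"))
    return failures


def main(argv):
    """argv: [script, hash_file, root_dir]. Returns 0 on success, 1 on any failure."""
    hash_path, root = argv[1], argv[2]
    with open(hash_path, encoding="utf-8") as f:
        failures = verify(f.read(), root)
    for name, reason in failures:
        print(f"{reason}: {name}", file=sys.stderr)
    return 1 if failures else 0
```

A script ending in `sys.exit(main(sys.argv))` would then report failure to the calling shell or CI job through its exit status, and the job can send its email on a non-zero result.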

Hello,

I can say that I'm very happy with your nice checksum tool. The only missing crucial function is to support the handling of multiple selected files/folders. Very often, I have to place checksums that way, but at different locations. So preparing a separate script is no option at all.

Please add support for selecting multiple files/folders.

Thank you

Chris

Great software.

I was wondering if it's possible to create one hash of an entire directory structure so the .hash file has one hash in it.

I want to be able to check that two directories (main & backup) are identical.

Thanks

If you do this a lot, consider adding a custom command for it to your Explorer's context (right-click) menu - there's a section on how to do this on this very page!

;o)
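For the "one hash for a whole directory structure" question above, one possible approach is to hash the sorted list of per-file hashes. The Python sketch below is an assumption-laden illustration, not how checksum works; `tree_digest` is a hypothetical helper, and empty folders are deliberately ignored.

```python
import hashlib
from pathlib import Path


def tree_digest(root, algo="sha1"):
    """Return one digest covering every file's relative path and contents."""
    root = Path(root)
    total = hashlib.new(algo)
    files = [p for p in root.rglob("*") if p.is_file()]
    # Sort by relative path so the result does not depend on directory order.
    for p in sorted(files, key=lambda q: q.relative_to(root).as_posix()):
        name = p.relative_to(root).as_posix()
        # read_bytes() loads the whole file; chunked reads suit very large files.
        file_digest = hashlib.new(algo, p.read_bytes()).hexdigest()
        total.update(f"{file_digest} *{name}\n".encode("utf-8"))
    return total.hexdigest()
```

Two trees give the same `tree_digest` only if they hold the same relative file names with the same contents. The trade-off is the one discussed earlier on this page: a single combined hash says that something differs, but not which file.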

Hello,

this seems to be a very great and valuable tool. As I've been looking for it for a time now, I'm really happy and thankful. Tried around and everything works great - good usability and sooo fast under Windows.

And with a little, very easy, customization the hash-files are compatible to md5deep under lx, too.

I will use this tool mainly for ensuring integrity and consistency among my multimedia directory trees (mainly photos), including backup / archive trees and current photo workflow.

There is one remaining question, a cmdline switch combination (or ini setting) I must have missed and I simply cannot find it in the online docu or by playing around.

It's regarding hash verification.

When I verify a larger tree, I can easily find (and get a report about) missing and changed files.

But at the same time I would like to know whether there are any new files in the tree (valid files that match the pattern, are not excluded, but do not have any line in the hash-file).

It would be like ... calling checksum vyr, which does not work together (can be called together, but does not report new files).

To make a clear point (as English is obviously not my native language and what I write could easily be misleading):

I am just looking for a report / log of additional, unhashed files during a verification run.

Did I miss something very simple and obvious? Could you be so kind as to help or give me a hint?

Or is the only solution to run checksum twice, once "vr" (to get missing and changed) and then "y" (to hash new ones)? With this approach I did not find a way to obtain a summary log of the newly added files. Of course it would be preferable to have the new files just reported, with the possibility to decide whether to add them to the hash table in a 2nd run (or simply to delete them if they are there for no good reason).

Thank you again and greetings from Austria,

Robert F.

If you want to create/add hashes, you need to run checksum in create mode (with synchronize enabled/chosen). Any new hashes will simply be tagged on to the end of the .hash file. You can have checksum time-stamp the entries, so it's easy enough to see what was added, see: do_timestamp=false in your checksum.ini (set it to true, obviously!).

;o)

ps. thank you for your support! much appreciated!
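A report-only listing of unhashed files, as asked for above, can also be produced outside checksum without hashing anything. This is a minimal Python sketch under stated assumptions: `listing_diff` is a hypothetical helper, entries use the `digest *name` format shown near the top of this page with paths relative to the tree root, and files ending in .hash, .md5 or .sha1 are treated as hash files and skipped.

```python
from pathlib import Path

HASH_SUFFIXES = {".hash", ".md5", ".sha1"}


def listing_diff(hash_text, root):
    """Compare names listed in a hash file with files on disk.

    Returns (missing, new): names listed but absent, and files present
    but not listed. No file contents are read.
    """
    listed = set()
    for line in hash_text.splitlines():
        digest, sep, name = line.strip().partition(" *")
        if sep:
            listed.add(name.replace("\\", "/"))  # normalise Windows separators
    root = Path(root)
    on_disk = {
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() not in HASH_SUFFIXES
    }
    return sorted(listed - on_disk), sorted(on_disk - listed)
```

The second list is the "new files" report; whether to hash or delete those files can then be decided before any create run.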

Hello,

Here is my case:

I have a program that can create a bootable disc. I created one 6 months ago and decided to make an iso file from it and save it. Then, today, I took the same program, made a bootable disc again and created a new iso file as well, just like the last time. Surprisingly, at least for me, both iso files have exactly the same checksum, I mean the same MD5 and SHA-1.

I thought every time I create an iso file from a CD the resulting file had a different hash or if I burned different bootable CDs with the same creator program and make iso files from each one, the resulting hash should be different. But no, it's the same.

Is this correct and I am missing something?

Thanks.

The real question is, what made you decide to create another bootable disk when you already have one?

;o)
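On the identical-hash question above: a hash depends only on the bytes that are fed to it, not on when or how the file was created. The short Python sketch below shows this for two files with the same contents but different timestamps (`same_content` is a hypothetical helper; whether a given disc-creation program writes byte-identical images each time is a property of that program, not of the hash).

```python
import hashlib
import os


def sha1_of(path):
    """Return the SHA-1 hex digest of a file's contents."""
    with open(path, "rb") as f:
        return hashlib.sha1(f.read()).hexdigest()


def same_content(path_a, path_b):
    """True if both files hash identically, regardless of name or timestamps."""
    return sha1_of(path_a) == sha1_of(path_b)
```

If a tool embedded a build date or serial number in the image, even one differing byte would produce a completely different hash, so matching hashes mean the two images are byte-for-byte the same.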

Got a problem.

Just finished a full 4TB disk hash to root (1day 5hrs!!) and decided to check it was ok, so ran checksum again using 'create' followed by synchronise, not expecting to find anything; that would be my check. BUT ... it found a missing movie folder (Zero D*** Thi*ty) and began creating fresh checksums. As nothing had changed and there was literally only 5 mins between runs this must be a bug, yes? Something to do with the folder and files starting with 'Z' maybe?? Both runs of checksum finished with success but the first run did NOT check all of the disk's filestore.

OK so I erroneously emailed you (vice using this form) about a feature request concerning adding Tiger and Whirlpool hashes to be compatible with hashdeep. Ignore that please.

What will REALLY be clutch, and will help the entire headless media server community like Limetech's Unraid community is if you can release a command line utility for linux. I know you've got a gui version, but it would be best if we could avoid having to do that on generally headless machines. We have the ability (still in beta at the moment) to create Docker Containers and KVM virtual machines that can run Windows or something with KDE, but that is really not an ideal situation for what most of us really need. What we need is a command line that can be scripted and added to cron

100% ideal solution - a slackware 14.1 command line build for native unraid use